The Foundation Model Transparency Index after 6 months

Authors: Rishi Bommasani, Kevin Klyman, Sayash Kapoor, Shayne Longpre, Betty Xiong, Nestor Maslej, and Percy Liang

Foundation models power impactful AI systems today: Google recently announced that all of its products with at least 2 billion users now rely on the Gemini model. The 2024 AI Index shows that developers are investing hundreds of millions of dollars into building their flagship models. As this technology rises in importance, the demand for transparency escalates. Governments recognize this challenge: the US, EU, China, Canada, and G7 all have taken steps to increase transparency surrounding foundation models. In fact, the Republic of Korea and the UK are convening world leaders today to discuss these very issues.

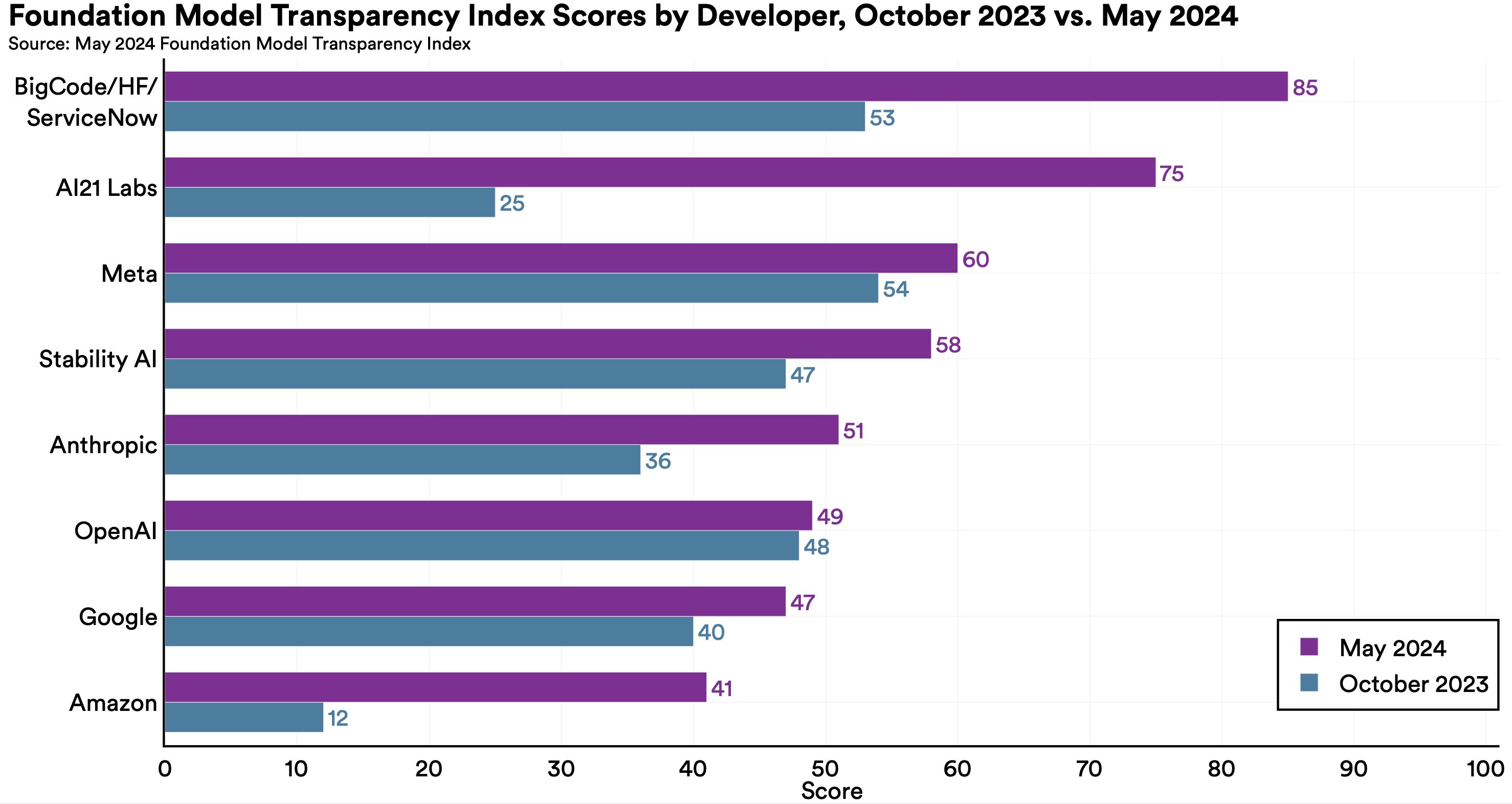

The Foundation Model Transparency Index (FMTI) was launched in October 2023. The October 2023 Index scored 10 major foundation model developers, such as OpenAI and Meta, based on what these companies disclosed publicly. The rubric consisted of 100 transparency indicators spanning matters like data, labor, compute, capabilities, limitations, risks, usage policies, and downstream impact. The October 2023 Index established that the ecosystem was opaque: developers scored just 37 out of 100 on average, and the top score barely eclipsed 50.

Today, we are publishing a follow-up study conducted six months later. Over this period, we reached out to developers and asked them to submit transparency reports that affirmatively disclose their practices in relation to our 100 indicators. Fourteen developers agreed, and, subject to their validation, we are making the reports publicly available. These reports include information the developers had not made public before we began this follow-up study; on average, each developer shared new information related to 16 indicators.

The May 2024 Foundation Model Transparency Index

We scored 14 companies: eight that were part of the October 2023 Index (e.g. Anthropic, Amazon) and six that are new to the Index (e.g. IBM, Mistral). Each developer designated its flagship foundation model and prepared a report disclosing information about that model (e.g. how much energy was used to train it). We scored these disclosures against the rubric for each indicator and sent the results to the companies, giving them the opportunity to rebut the scores. After a three-month process, we finalized the transparency reports and published them as interactive resources on our website.

Overall, we find substantial room for improvement: the average score is 58 out of 100. Three companies score well above the average, nine are clustered around it, and two are noticeably below it. Analyzing the results further, we find that developers score highest on areas like the capabilities of their models and documentation for downstream deployers. In contrast, areas like data access, evaluations of model trustworthiness, and downstream impact are the most opaque.

If developers emulated their most transparent counterparts on each indicator, we would see major gains in transparency: at least one company scores a point on 96 of the 100 indicators, and multiple companies score points on 89 of them.
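These coverage figures are simple aggregations over a developer-by-indicator matrix. The minimal Python sketch below assumes each indicator is scored as a single binary point (0 or 1) and uses invented toy data for three hypothetical developers and six indicators; the values are not actual FMTI scores, and the names `SCORES`, `total_score`, and `indicators_met_by_at_least` are illustrative rather than part of any FMTI tooling.

```python
from typing import Dict, List

# Hypothetical toy data (NOT real FMTI results): one row per developer,
# one 0/1 entry per indicator (1 = the developer scores that point).
SCORES: Dict[str, List[int]] = {
    "Developer A": [1, 1, 0, 1, 0, 0],
    "Developer B": [1, 0, 0, 1, 1, 0],
    "Developer C": [0, 1, 0, 1, 0, 0],
}


def total_score(row: List[int]) -> int:
    """A developer's score is the number of indicators it satisfies."""
    return sum(row)


def indicators_met_by_at_least(scores: Dict[str, List[int]], k: int) -> int:
    """Count indicators on which at least k developers score a point."""
    rows = list(scores.values())
    num_indicators = len(rows[0])
    return sum(
        1
        for j in range(num_indicators)
        if sum(row[j] for row in rows) >= k
    )


if __name__ == "__main__":
    for name, row in SCORES.items():
        # Prints one line per developer: A -> 3, B -> 3, C -> 2
        print(name, total_score(row))
    # "Best-of" score: a developer matching the top performer on every indicator.
    print(indicators_met_by_at_least(SCORES, 1))  # 4
    # Indicators on which multiple developers score a point.
    print(indicators_met_by_at_least(SCORES, 2))  # 3
```

With `k = 1`, the count is the score a developer would reach by matching the most transparent developer on every indicator; applied to the real 100-indicator matrix, this is the kind of count that yields 96, and `k = 2` corresponds to the 89 indicators on which multiple companies score.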

Changes from October 2023

We scored companies on the same 100 indicators, using the same standard for awarding each indicator, so that the October 2023 and May 2024 results are directly comparable. The clear trend is that developers have become more transparent: the average score rose from 37 to 58 points, and the top score rose from 54 to 85. Improvement occurred across the board, with all eight developers assessed in both iterations improving their scores. That said, some took very large strides, like AI21 Labs going from 25 to 75 points, whereas others made only marginal changes, like OpenAI going from 48 to 49 points.

These increases are driven by companies disclosing new information through the Index. For example, companies scored points on just 17% of the compute-related indicators in October 2023, whereas they now score on 51% of them on average. This change reflects that several companies now disclose the amount of compute, the hardware, and the energy required to build their flagship foundation models.

However, some areas of the Index show sustained and systemic opacity, meaning that almost no developers disclose information on these matters. Specifically, developers lack transparency on data-related matters like the copyright status of their data and the presence of personally identifiable information in it. Similarly, developers do not clarify the nature of their models' downstream impact, such as the market sectors and countries in which their models are used and how they are used there.

Moving Forward

The Foundation Model Transparency Index is an ongoing initiative to measure, and thereby improve, transparency in the foundation model ecosystem. The May 2024 Index shows a major uptick in transparency while also revealing clear room for improvement, with specific areas showing little progress. With the May 2024 Index, we release transparency reports validated by 14 major developers as a new resource for the community. Moving forward, we envision developers releasing transparency reports in line with recommendations from the White House and the G7. See the paper for more details.

Acknowledgements

We thank the Foundation Model Transparency Index Advisory Board for guidance. We would also like to thank the 14 model developers for engaging in this effort and Loredana Fattorini for preparing the visuals in this work.