The AI Executive Order through the lens of the AI Index

Authors: Jonathan Xue and Rishi Bommasani

Policymakers are actively grappling with how to govern AI. Last week, the EU AI Act was published as comprehensive legislation on AI. Two months ago, policymakers and technologists from around the world convened in Seoul for the second global AI summit. As different jurisdictions design AI policy, their efforts should be grounded in the realities of AI technology.

As a case study, we align the Biden Administration’s 2023 AI Executive Order with Stanford HAI’s 2024 AI Index. The Executive Order (EO) initiates a sweeping set of requirements across the US government: it imposes over 150 obligations, many to be completed within the year. The AI Index documents the current state of AI, spanning developments in research, industry, and the public at large. For each theme in the Executive Order, we use the AI Index as a repository for identifying relevant trends that provide useful context. Our work complements efforts to understand policy directly, for example by tracking progress on EO implementation (see the trackers from Stanford HAI and Georgetown CSET).

Takeaways.

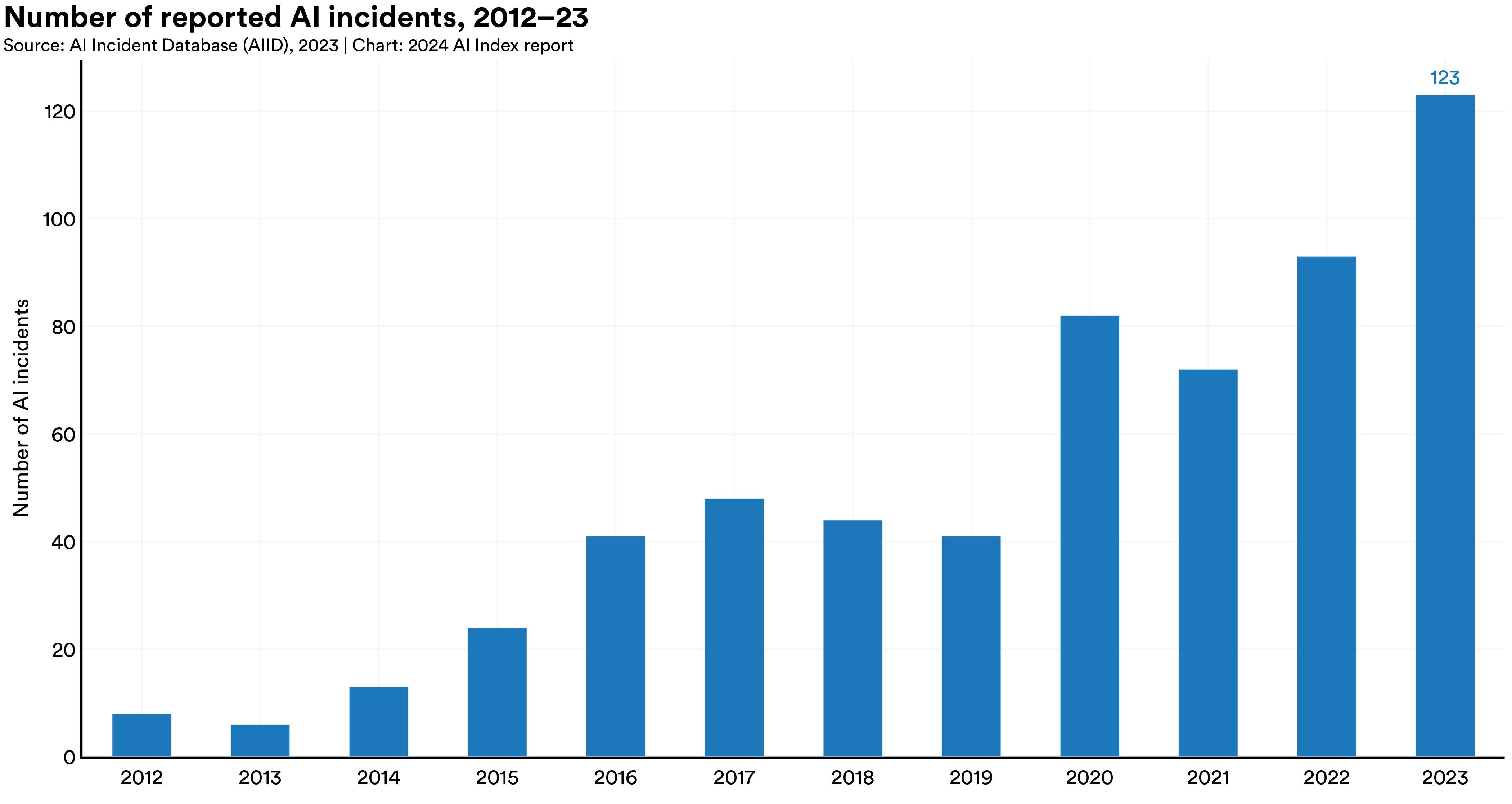

- Threat Priority: In Section 4, the EO highlights security threats from AI with a focus on chemical, biological, radiological, and nuclear (CBRN) threats: “To better understand and mitigate the risk of AI being misused to assist in the development or use of CBRN threats […] The Secretary of Homeland Security shall: consult with experts in AI and CBRN issues from the Department of Energy, private AI laboratories, academia, and third-party model evaluators, as appropriate, to evaluate AI model capabilities to present CBRN threats — for the sole purpose of guarding against those threats — as well as options for minimizing the risks of AI model misuse to generate or exacerbate those threats” (Sec. 4.4 (a)(i)). This emphasis on catastrophic risks departs from how responsible AI is conceptualized in the AI Index. For example, the Index draws on the AI Incident Database, which documents misuses and failures of AI. In 2023, the AI Incident Database recorded 123 such incidents, spanning deepfakes, voice cloning, harmful generated content, and model malfunctions. While the EO does take action on content provenance by mandating an internal government report to the Director of OMB, these steps are tentative compared to the concrete measures for preventing CBRN threats, such as directly monitoring developers of advanced AI models and regulating the synthetic nucleic acid pipeline. Overall, there is a disconnect between the demonstrated harms documented in the AI Index and the more speculative large-scale risks addressed in Section 4 of the EO.

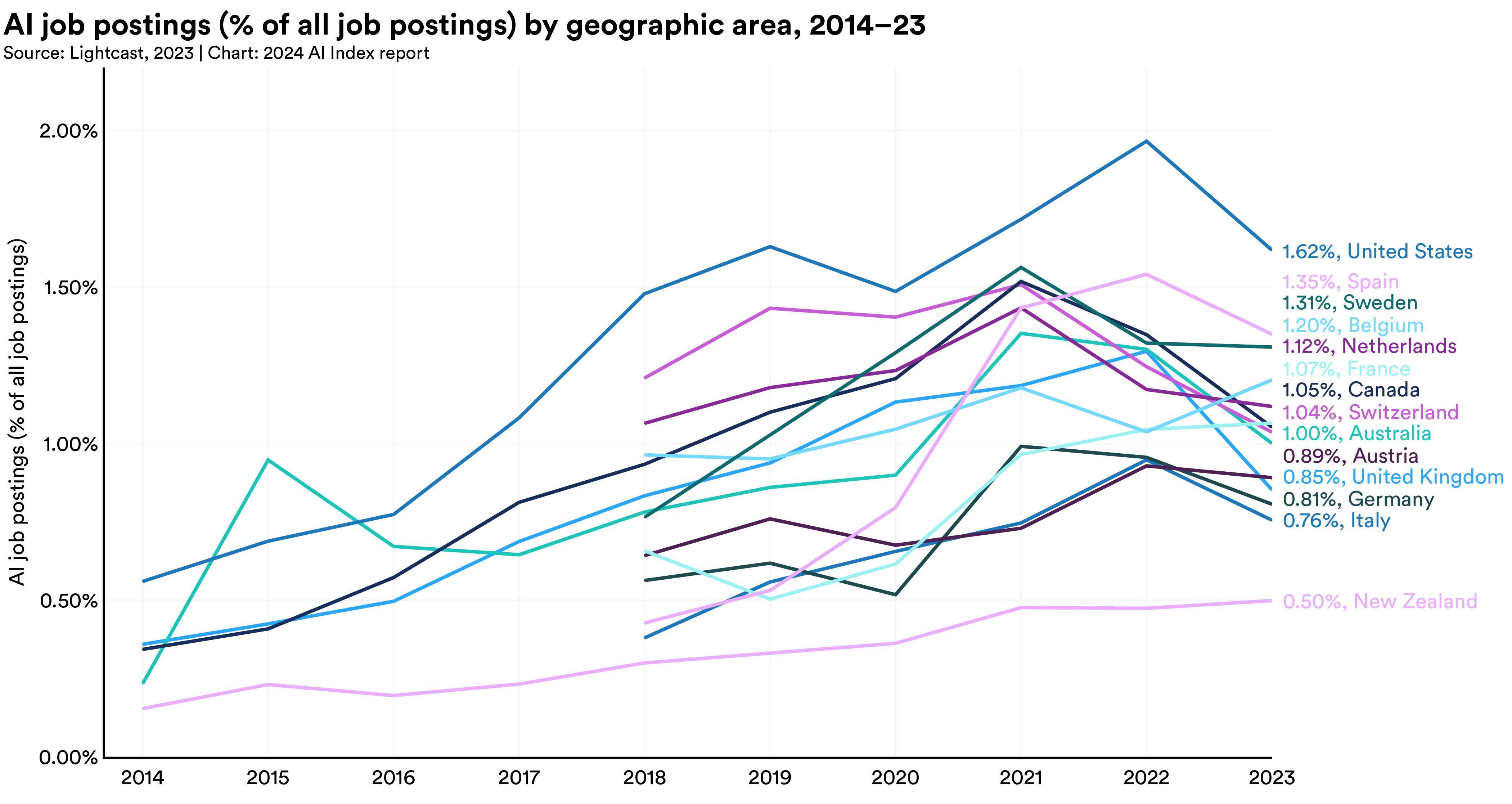

- Global Innovation: Attracting and retaining AI talent in the US is a major strategic objective. To that end, the EO modifies immigration policy to streamline visa applications for AI workers and increase the likelihood of visa renewals. The government will also fund more AI research to catalyze domestic innovation and recruit foreign talent. Drawing on LinkedIn data, the AI Index documents that AI talent is highly mobile: individuals are willing to emigrate for better job opportunities. The Index also reports that the US leads the world in the share of job postings that are AI-related (1.62% in 2023). Because AI talent moves toward opportunity and the US already offers the largest share of AI-related openings, lowering visa barriers is likely to further reinforce US strength in the AI job market.

- Interpretation of Fairness: The EO names four concrete areas in its treatment of discrimination: criminal justice, public benefits, AI-based hiring systems, and algorithmic housing decisions. This emphasis on allocative decision making reflects the fact that such decisions directly affect individual welfare. In contrast, research on fairness often considers whether AI-generated content is non-toxic and broadly representative. While both the US government and the AI research community study fairness, their relative emphasis on allocation versus representation appears to diverge.

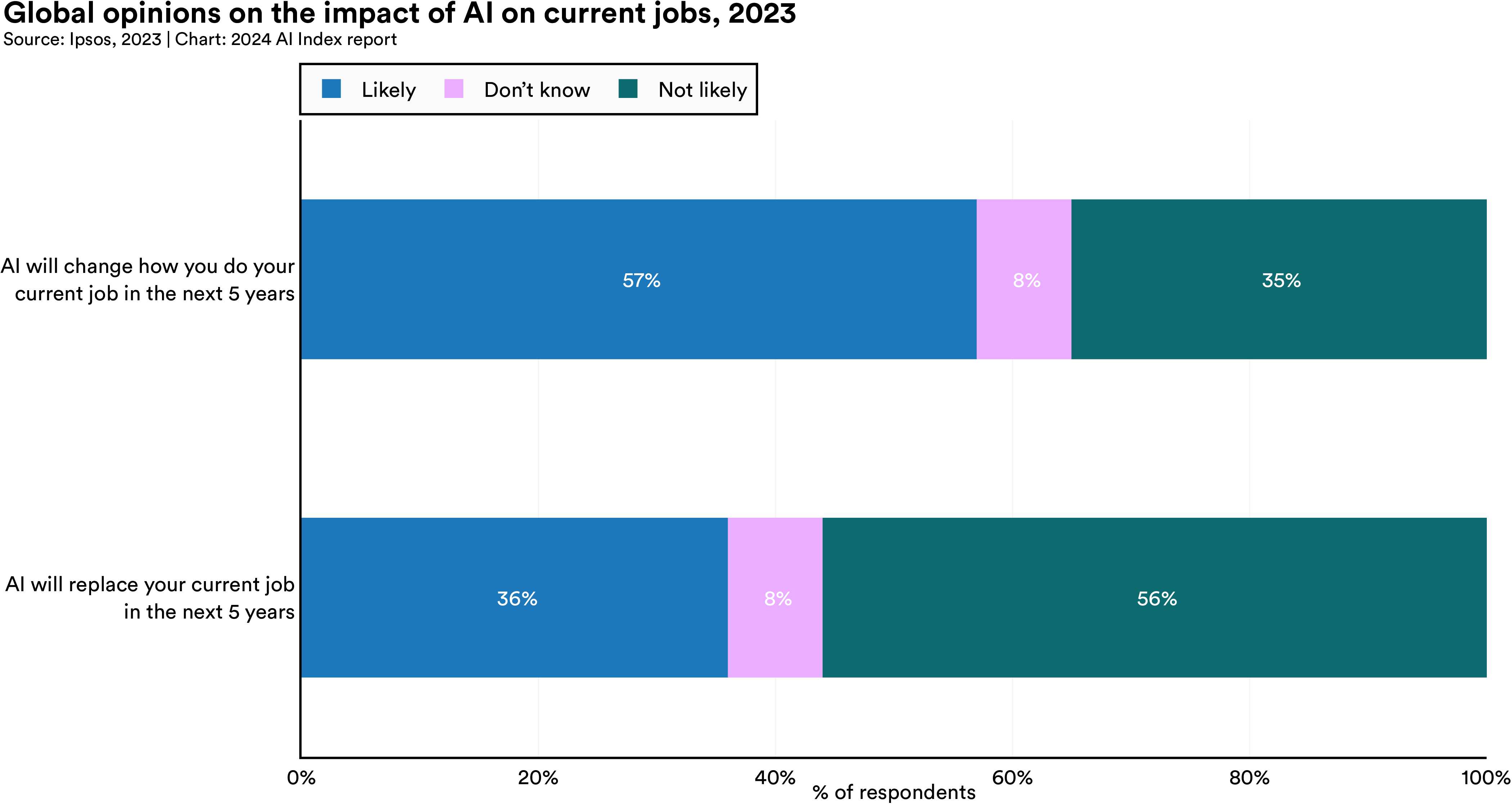

- AI in the Workforce: According to the AI Index, the general public is very concerned about AI-driven labor market disruption. In a 2023 Ipsos survey, 36% of respondents said it is likely that AI will replace their job in the next five years. The EO addresses this issue directly, tasking the Department of Labor with delivering a report to the President on worker displacement due to AI. Possible future actions include expanding unemployment insurance to cover such cases and providing additional federal support to workers negatively affected by AI automation.

Methodology. Within the EO, we identify the central theme of each top-level section that sets out specific government obligations. Using these themes, we select the most relevant figures and analyses in the AI Index. In this way, the Executive Order provides a lens for sifting through the wealth of information in the AI Index.

Below, we analyze each relevant section of the EO in the context of the AI Index:

Section 2 - Policy and Principles

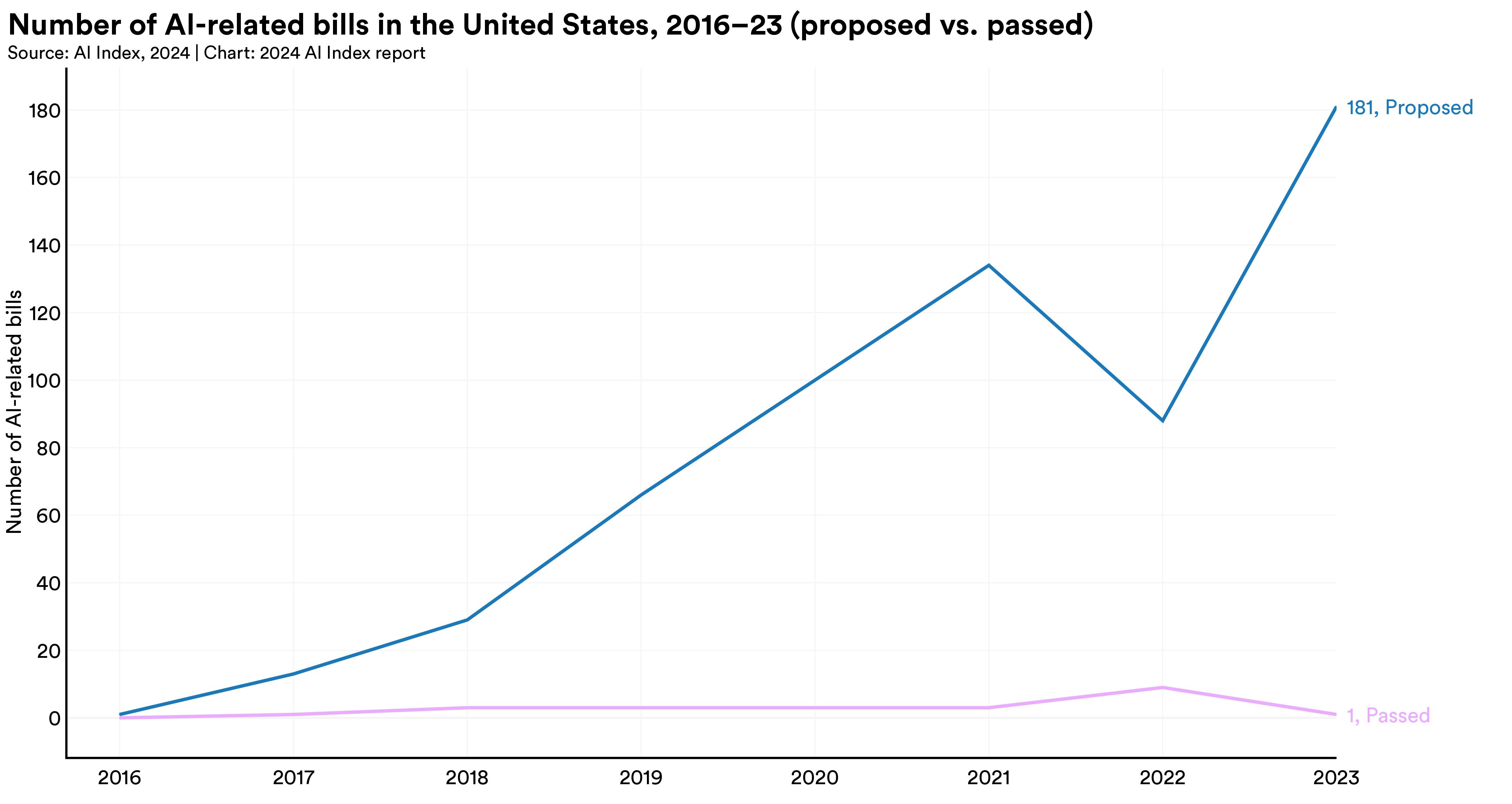

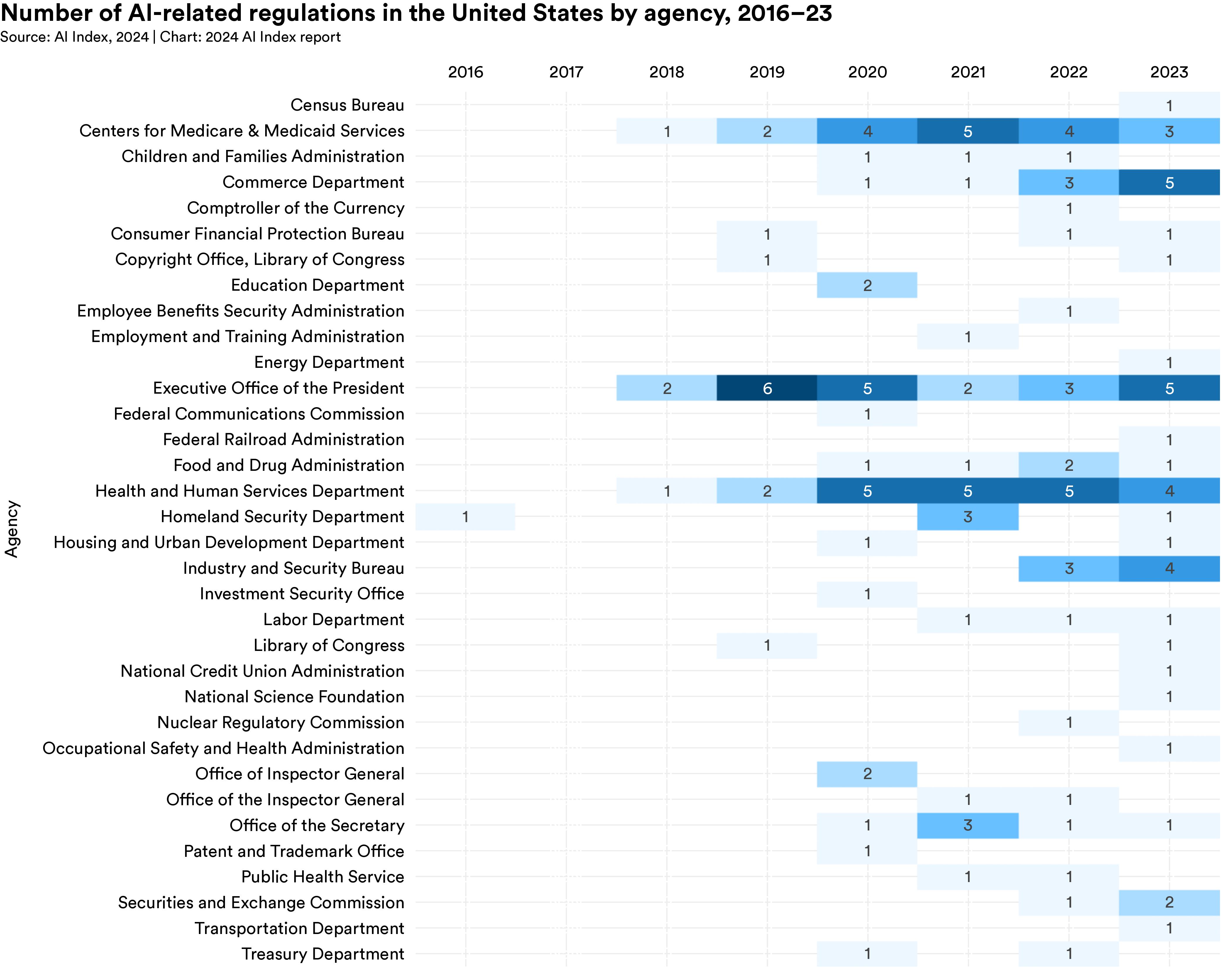

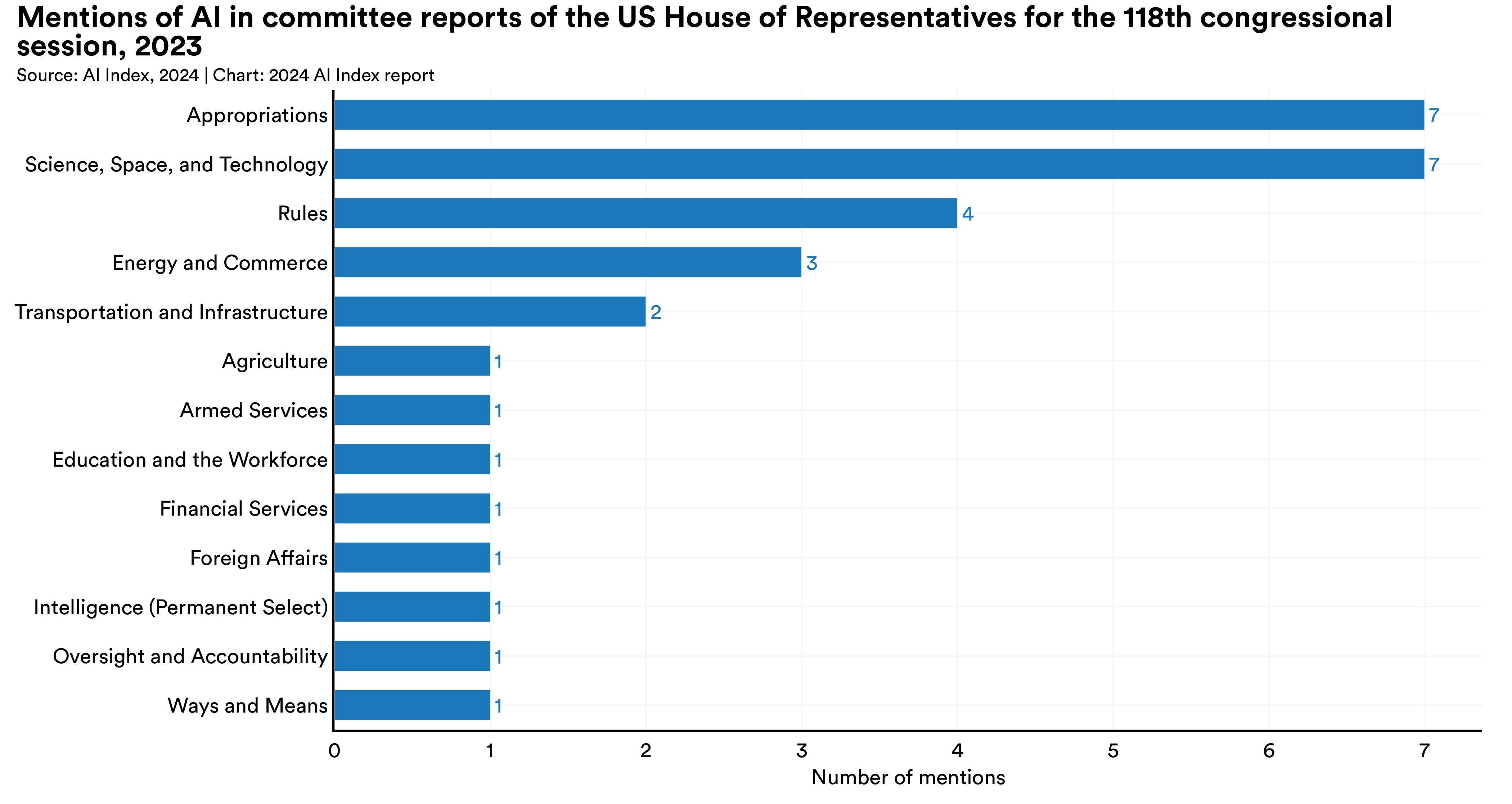

Summary - The AI Index Report shows growing interest in AI among US policymakers, with more proposed AI bills, more AI regulations enacted by government agencies, and more frequent mentions of AI in Congressional reports. These trends align with the EO’s message that the government must step up to address a changing AI landscape and demonstrate a meaningful commitment to remaining competitive globally.

“When undertaking the actions set forth in this order, executive departments and agencies shall, as appropriate and consistent with applicable law, adhere to these principles […] AI policies must be consistent with my Administration’s dedication to advancing equity.” (EO Sec. 2.d)

“It is important to manage the risks from the Federal Government’s own use of AI and increase its internal capacity to regulate, govern, and support responsible use of AI to deliver better results for Americans.” (EO Sec. 2.g)

“The Federal Government should lead the way to global societal, economic, and technological progress, as the United States has in previous eras of disruptive innovation and change.” (EO Sec. 2.h)

Section 4 - Ensuring the Safety and Security of AI Technology

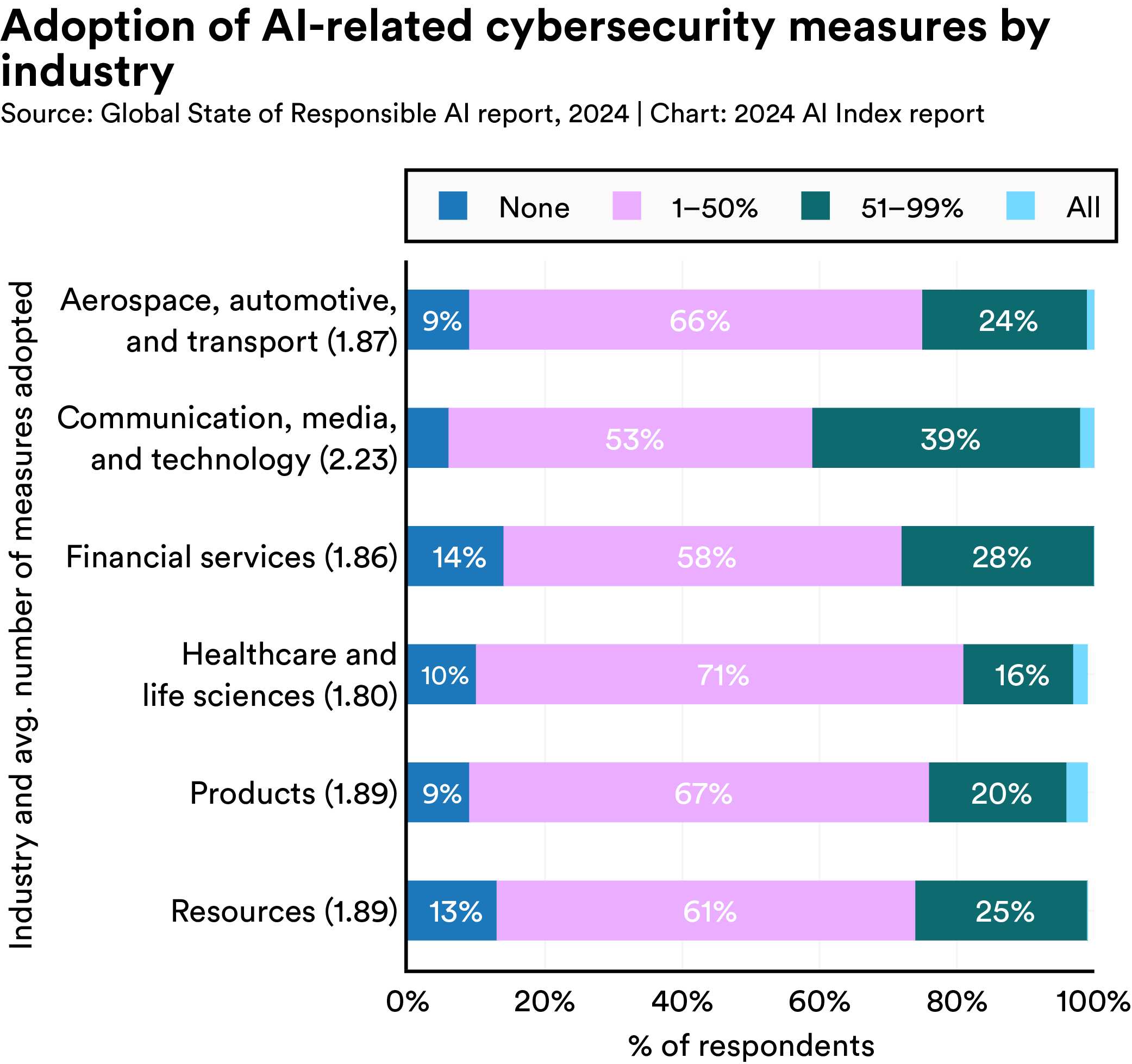

Summary - A record number of reported AI incidents, involving abusive uses of AI as well as misinformation and bias, has driven a broad push across academia, industry, and government to build safe and secure AI models. Researchers increasingly use benchmarks such as TruthfulQA and RealToxicityPrompts to measure these risks. Industry is strengthening cybersecurity practices to defend user data against external attacks. AI developers are also hardening their generative AI offerings against malicious actors (see the report on generative AI misuse from Google DeepMind). Meanwhile, the government has increased defense spending to fund research on military applications of AI. Notably, the incidents reported in the AI Index are misuses or failures, and they do not correspond to the government’s focus on large-scale CBRN threats.

“To help ensure the development of safe, secure, and trustworthy AI systems […] shall establish guidelines and best practices, with the aim of promoting consensus industry standards, for developing and deploying safe, secure, and trustworthy systems.” (EO Sec. 4.1.a.i)

“Shall evaluate and provide to the Secretary of Homeland Security an assessment of potential risks related to the use of AI in critical infrastructure sectors involved, including ways in which deploying AI may make critical infrastructure systems more vulnerable to critical failures, physical attacks, and cyber attacks.” (EO Sec. 4.3.a.i)

Section 5 - Promoting Innovation and Competition

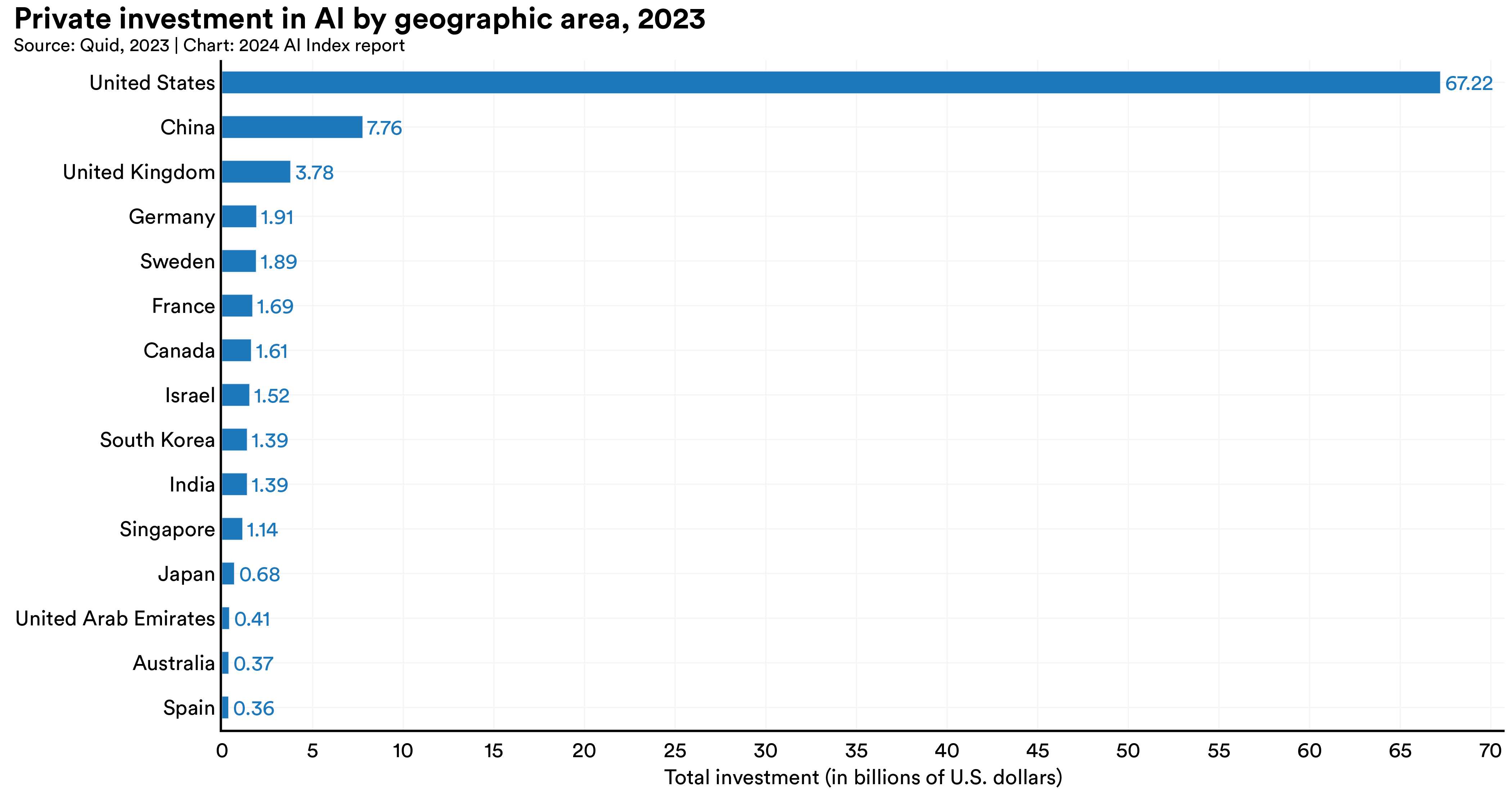

Summary - As AI becomes an increasingly global pursuit, countries compete to attract AI experts for both governance and development. A primary lever for attracting AI talent is funding, as reflected in the United States’ aggressive private-sector AI investment. The US also currently leads in the share of job postings that are AI-related. Even so, other countries have also succeeded in attracting global AI talent, reflecting the growth of the global AI market.

“To develop and strengthen public-private partnerships for advancing innovation, commercialization, and risk-mitigation methods for AI […] shall fund and launch at least one NSF Regional Innovation Engine that prioritizes AI-related work.” (EO Sec. 5.2.a.ii)

“Shall […] promote competition by increasing, where appropriate and to the extent permitted by law, the availability of resources to startups and small business, including: funding for physical assets.” (EO Sec. 5.3.b.iii)

Section 6 - Supporting Workers

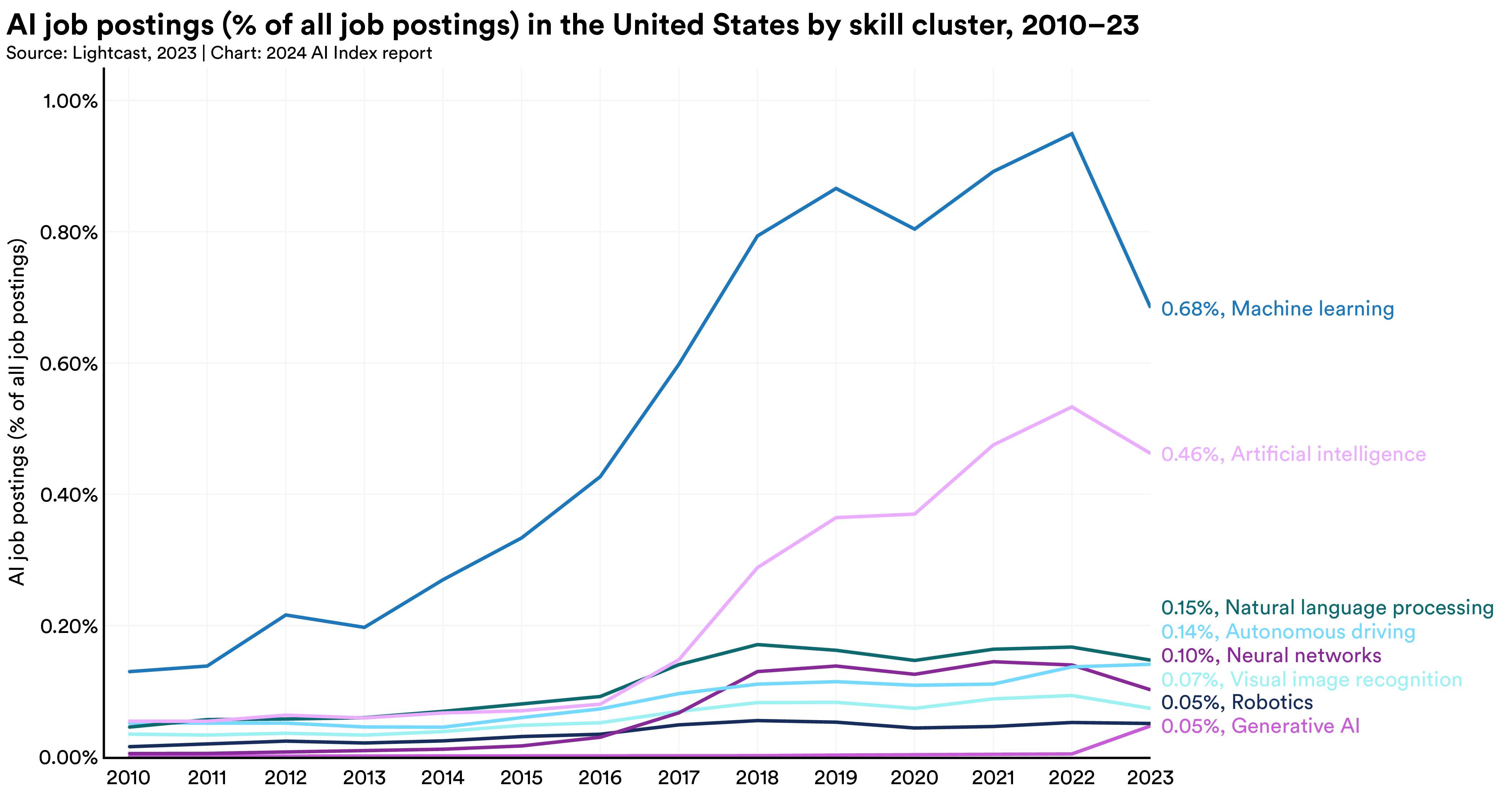

Summary - The Executive Order reinforces the Administration’s commitment to supporting workers as AI is increasingly integrated across industry sectors. In the job market, a growing number of postings seek machine learning and artificial intelligence skills. Among workers at large, sentiment about how AI will affect their jobs is mixed: over half agree that AI will change how they do their job, and over one-third believe that AI will replace their job in the next five years.

“To foster a diverse AI-ready workforce, […] shall prioritize available resources to support AI-related education and AI-related workforce development through existing programs.” (EO Sec. 6.c)

“To help ensure that AI deployed in the workplace advances employees’ well-being […] shall develop and publish principles and best practices for employers that could be used to mitigate AI’s potential harms to employees’ well-being and maximize its potential benefits.” (EO Sec. 6.b.i)

Section 7 - Advancing Equity and Civil Rights

Summary - The Executive Order highlights discrimination by AI as a primary concern in the job market and in lending practices. While these allocative contexts are often used as motivating examples in fairness research, neither is frequently studied in empirical AI research. Researchers increasingly focus on eliminating biases that arise during AI training, such as implicit gender-based or racial associations. Although researchers have posited that these biases can later manifest in hiring and loan-assignment algorithms, such links often have yet to be rigorously established. This disconnect between how governments conceptualize discrimination and how researchers study it makes it difficult to align the figures of the AI Index with the EO language.

Section 8 - Protecting Consumers, Patients, Passengers, and Students

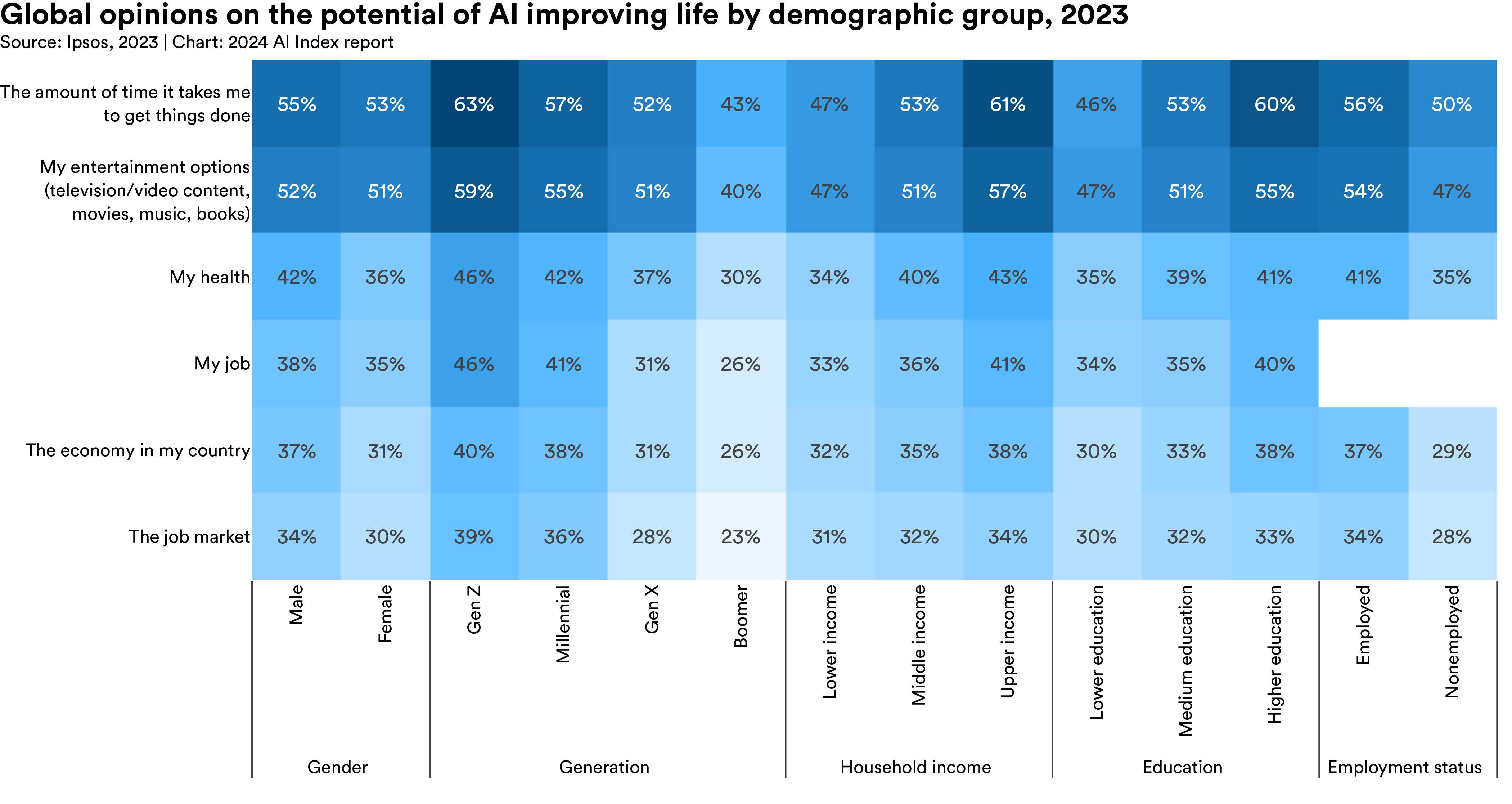

Summary - As AI is deployed more widely, the government aims to protect individuals from adverse impacts. People hold mixed opinions on the benefits of various AI applications but are generally pessimistic about AI’s ability to protect data privacy. Across demographic groups, most respondents hold mixed views on whether AI will improve their lives, but generally agree that AI improves efficiency and entertainment options. The Executive Order outlines stricter oversight of AI in the health and human services sector, including drug development, as well as monitoring of data privacy practices.

“Shall develop resources, policies, and guidance regarding AI. These resources shall address safe, responsible, and nondiscriminatory uses of AI in education, including the impact AI systems have on vulnerable and underserved communities.” (EO Sec. 8.d)

“Encouraged to consider actions related to how AI will affect communications networks and consumers, including by […] providing support for efforts to improve network security, resiliency, and interoperability using next-generation technologies.” (EO Sec. 8.e)

Section 9 - Protecting Privacy

Summary - The Executive Order outlines a plan for mitigating AI risks to consumer privacy and for evaluating how government agencies access user data. Neither goal is easily quantified through a figure in the AI Index Report, because both the amount of consumer data AI developers have obtained and the amount of data government organizations collect are unknown.

Section 10 - Advancing Federal Government Use of AI

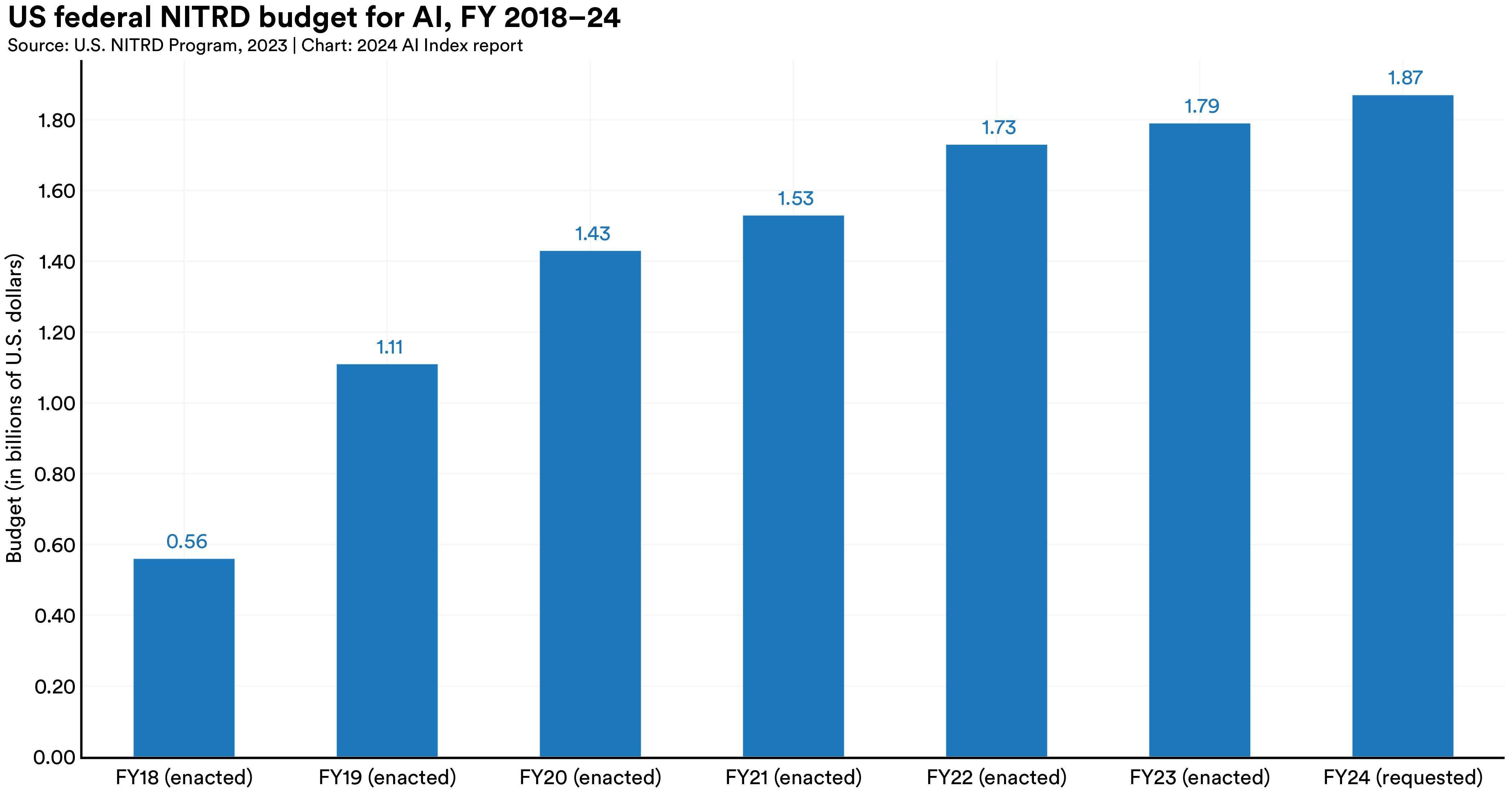

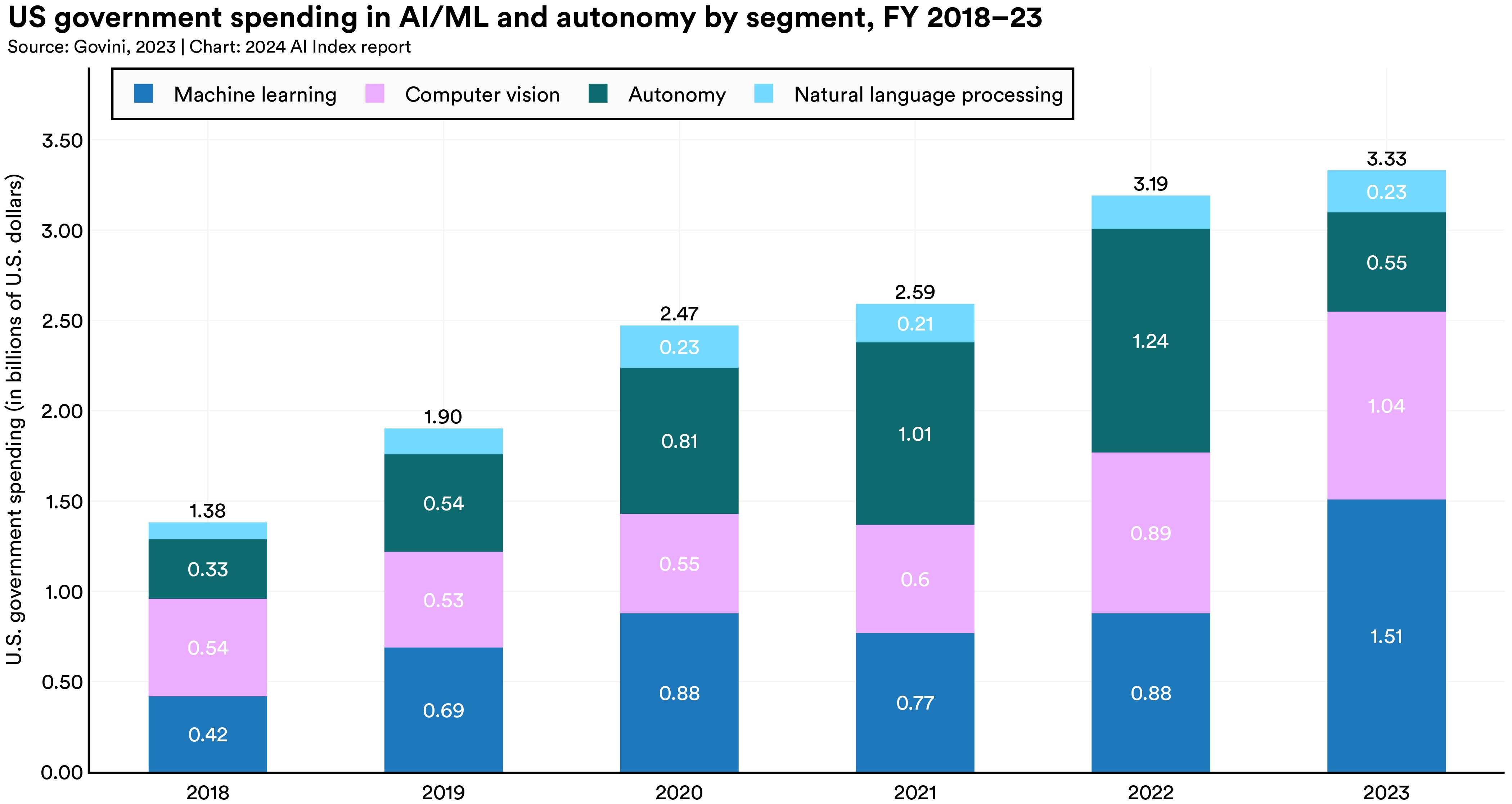

Summary - Beyond governing how AI is used outside the government, the Executive Order also outlines a plan to expand the federal government’s own use of AI applications. The Networking and Information Technology Research and Development (NITRD) program has requested steadily increasing funding for AI work, consistent with this shift. Meanwhile, overall government spending on AI has also risen, reaching $3.3 billion last year.

“To provide guidance on Federal Government use of AI […] shall issue guidance to agencies to strengthen the effective and appropriate use of AI, advance AI innovation, and manage risks from AI in the Federal Government.” (EO Sec. 10.1.b)

“To advance the responsible and secure use of generative AI in the Federal Government […] As generative AI products become widely available and common in online platforms, agencies are discouraged from imposing broad general bans or blocks on agency use of generative AI.” (EO Sec. 10.1.f.i)

Section 11 - Strengthening American Leadership Abroad

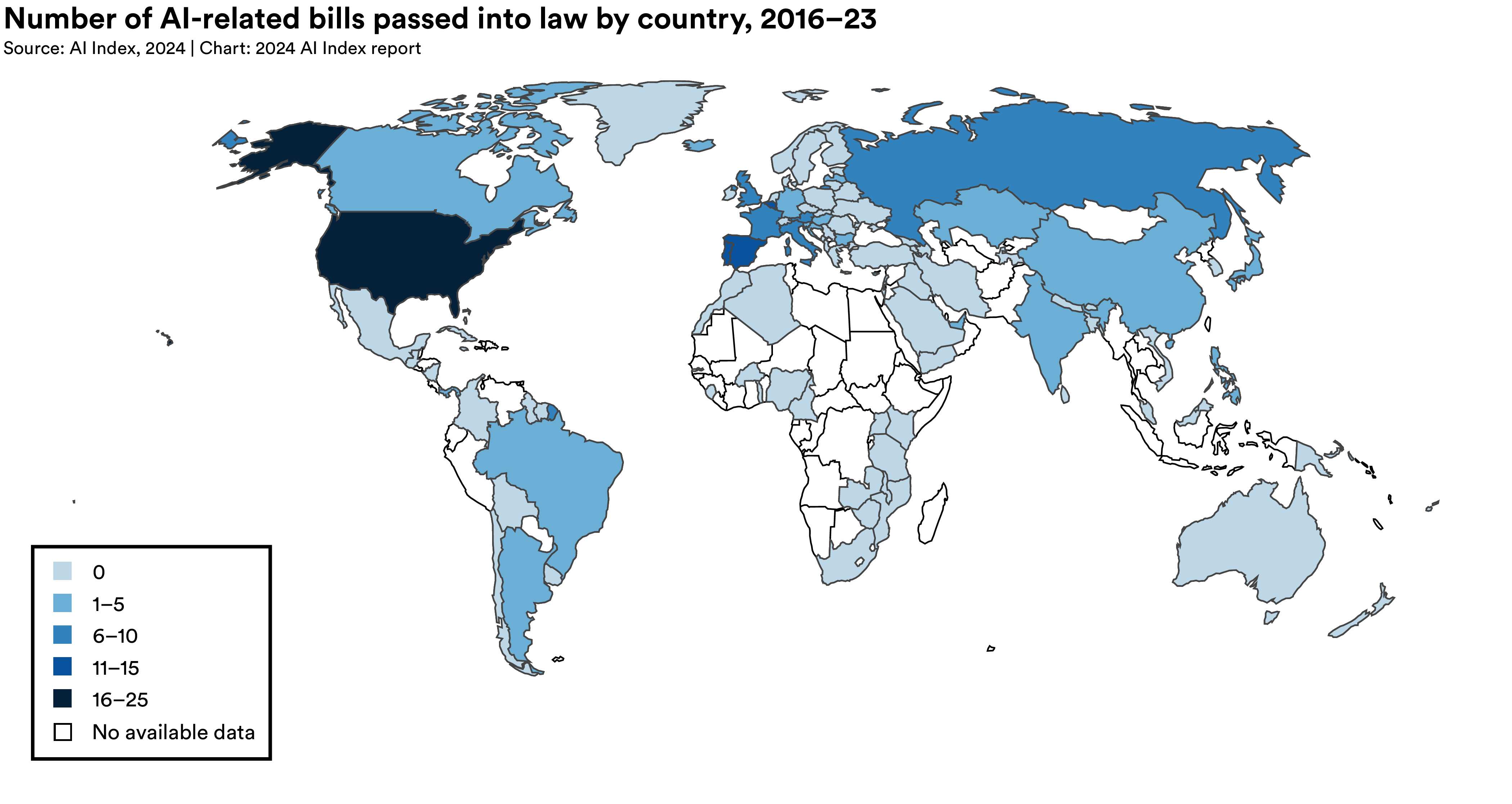

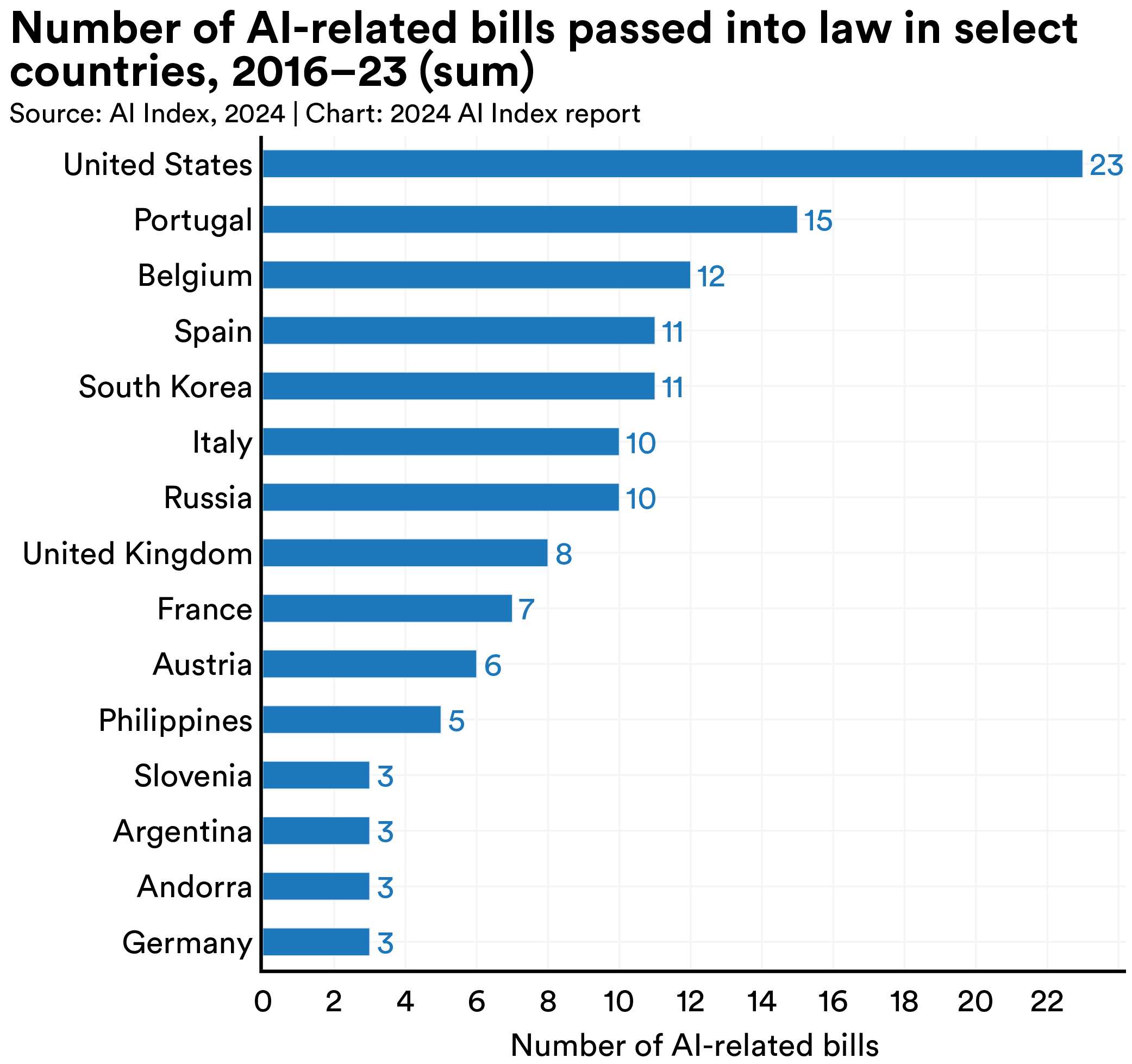

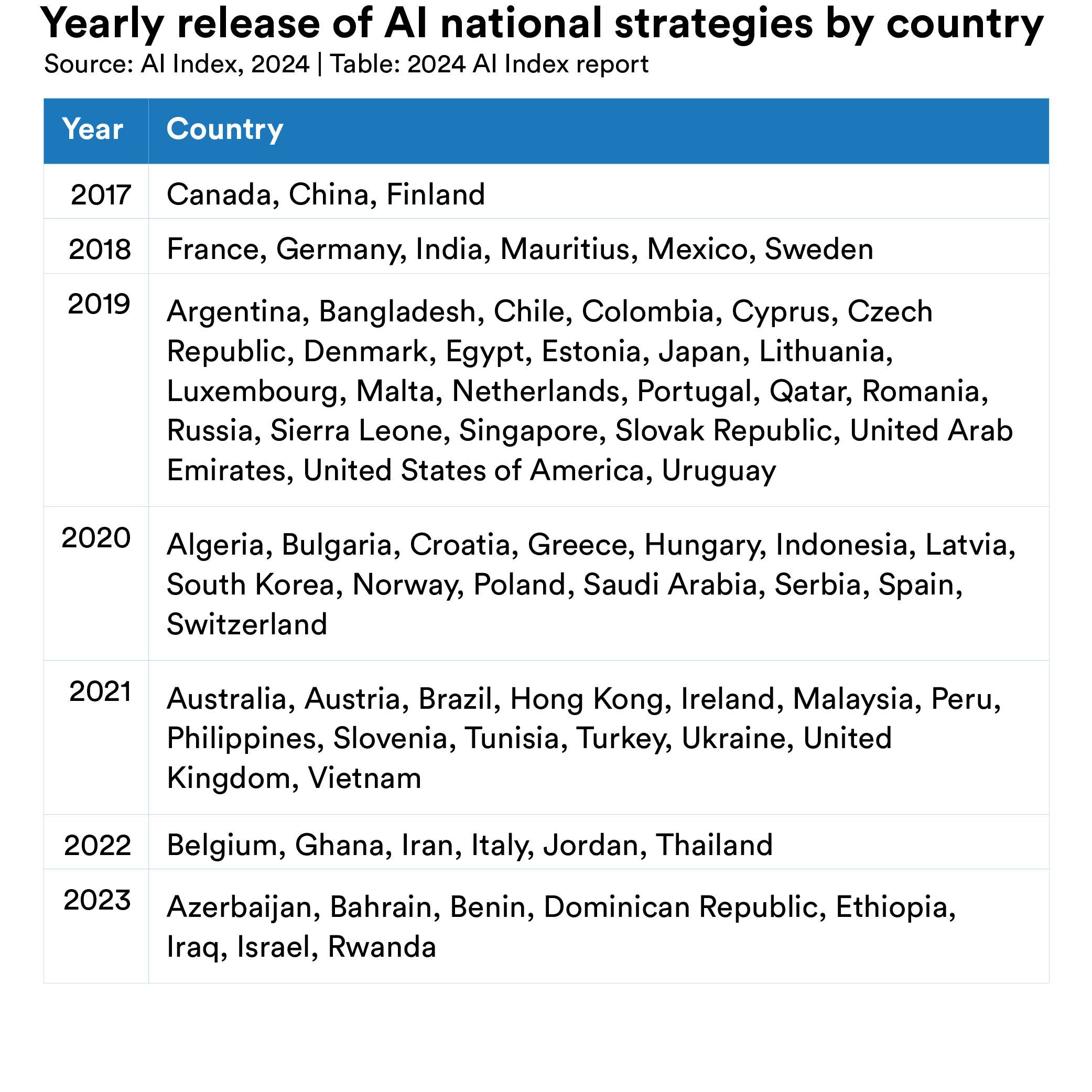

Summary - AI governance and policy have become increasingly collaborative, as the US works with counterparts in the EU, UK, and Japan, among others, to design regulation. The United States still leads all countries in the number of AI-related bills passed into law, and it seeks to use this position to help guide other countries on AI policy. Since the release of the US national AI strategy in 2019, many nations have developed their own national strategies.

“Lead preparations for a coordinated effort with key international allies and partners […] to drive the development and implementation of AI-related consensus standards, cooperation and coordination, and information sharing.” (EO Sec. 11.b)

“To strengthen United States leadership of global efforts to unlock AI’s potential and meet its challenges […] shall lead efforts outside of military and intelligence areas to expand engagements with international allies and partners in relevant bilateral, multilateral, and multi-stakeholder fora.” (EO Sec. 11.a.ii)

“Shall develop a Global AI Research Agenda to guide the objectives and implementation of AI-related research in contexts beyond United States borders. The Agenda shall include principles, guidelines, priorities, and best practices aimed at ensuring the safe, responsible, beneficial, and sustainable global development and adoption of AI.” (EO Sec. 11.c.ii)

Acknowledgements

We thank the AI Index Team for their work on the 2024 AI Index Report. All visuals are directly from the AI Index and are publicly available.