The Foundation Model Transparency Index

A comprehensive assessment of the transparency of foundation model developers

Paper (December 2025) Transparency Reports Data Board Press

The 2025 edition is the first in which the indicators have changed. Drawing on insights from the past two FMTI editions and on changes in how foundation models are developed, we made significant changes to the transparency indicators. These include raising the bar so that disclosed information is useful, adding indicators that reflect newly salient topics, and targeting organizational practices so that the Index focuses on the AI companies rather than only on the technologies they build.

As in 2024, we asked companies to provide us with a report that proactively discloses information about the transparency indicators. Most companies did so, but some key companies did not. For these 6 companies (Alibaba, Anthropic, DeepSeek, Midjourney, Mistral, xAI), we manually gathered information as the basis for scoring, and we supplemented this manual collection with an AI agent to identify relevant content that the FMTI team may have overlooked.

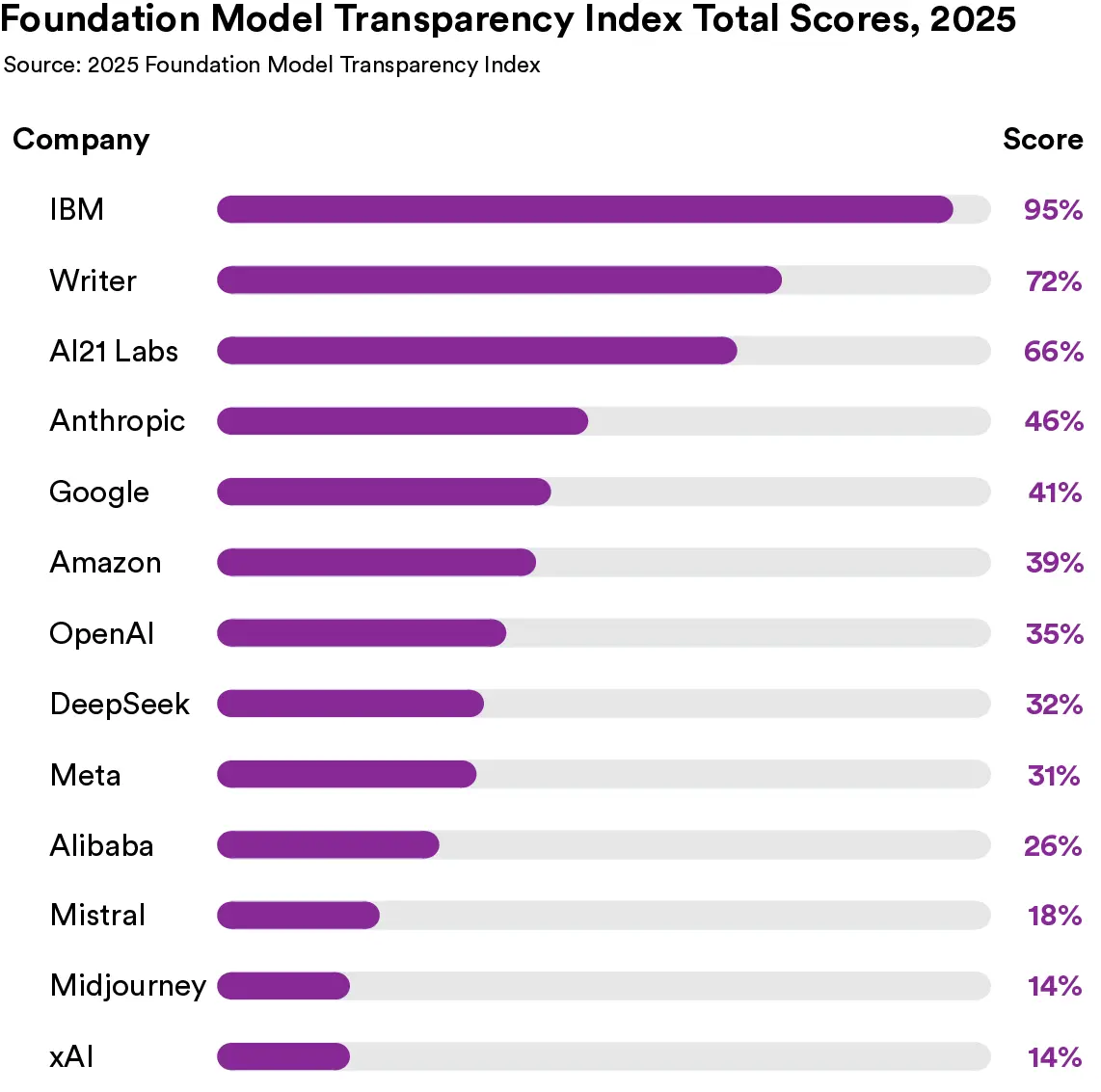

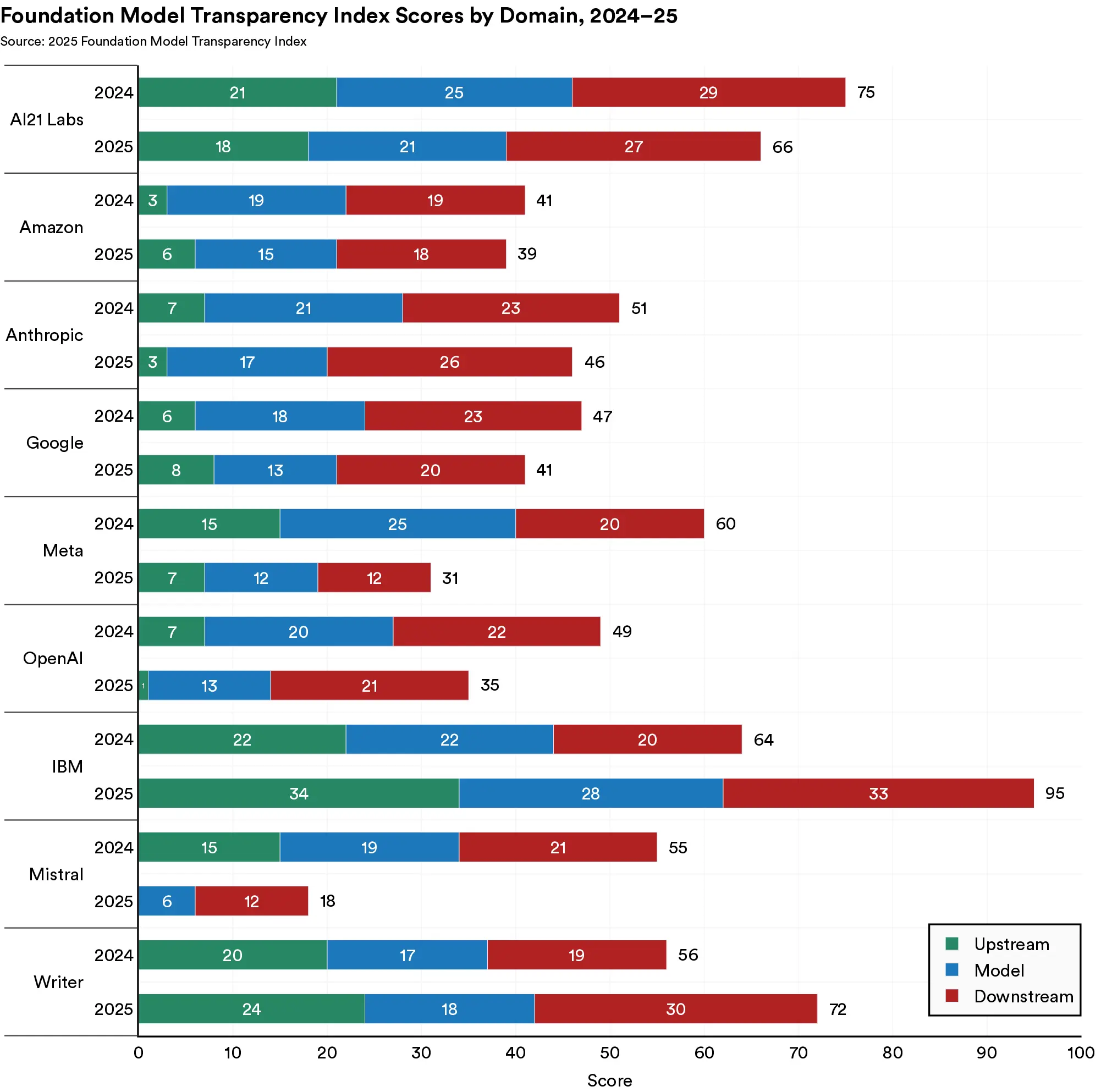

Rankings flip from 2023 to 2025. Six companies were scored in all three years. Of these six, Meta and OpenAI drop from 1st and 2nd place in 2023 to 5th and 6th in 2025. Meanwhile, AI21 and Anthropic rise from 4th and 5th place in 2023 to 1st and 2nd in 2025.

Training data continues to be opaque. In 2023 and 2024, companies tended to score poorly in the Data subdomain, remaining opaque about topics such as copyright, licenses, and PII. This is still the case in 2025: companies tend to score poorly in the Data Acquisition and Data Properties subdomains.

Many companies do not disclose basic information about the model itself. Amazon, Google, Midjourney, Mistral, OpenAI, and xAI score on none of the indicators in the Model Information subdomain. This includes the basic model information indicator, which covers input modality, output modality, model size, model components, and model architecture.

Openness correlates with, but is not sufficient for, transparency. The 5 open-weight developers outscore the 8 closed-weight developers overall and in every domain. However, high-profile open model developers are still quite opaque: Alibaba, DeepSeek, and Meta all score in the bottom half of companies.

US companies score higher on average (but also hold the two lowest scores). The gap between the 9 US companies and the 4 non-US companies is driven by the Downstream domain, highlighting geographic differences in disclosures about usage data, post-deployment impact measurement, and accountability mechanisms.

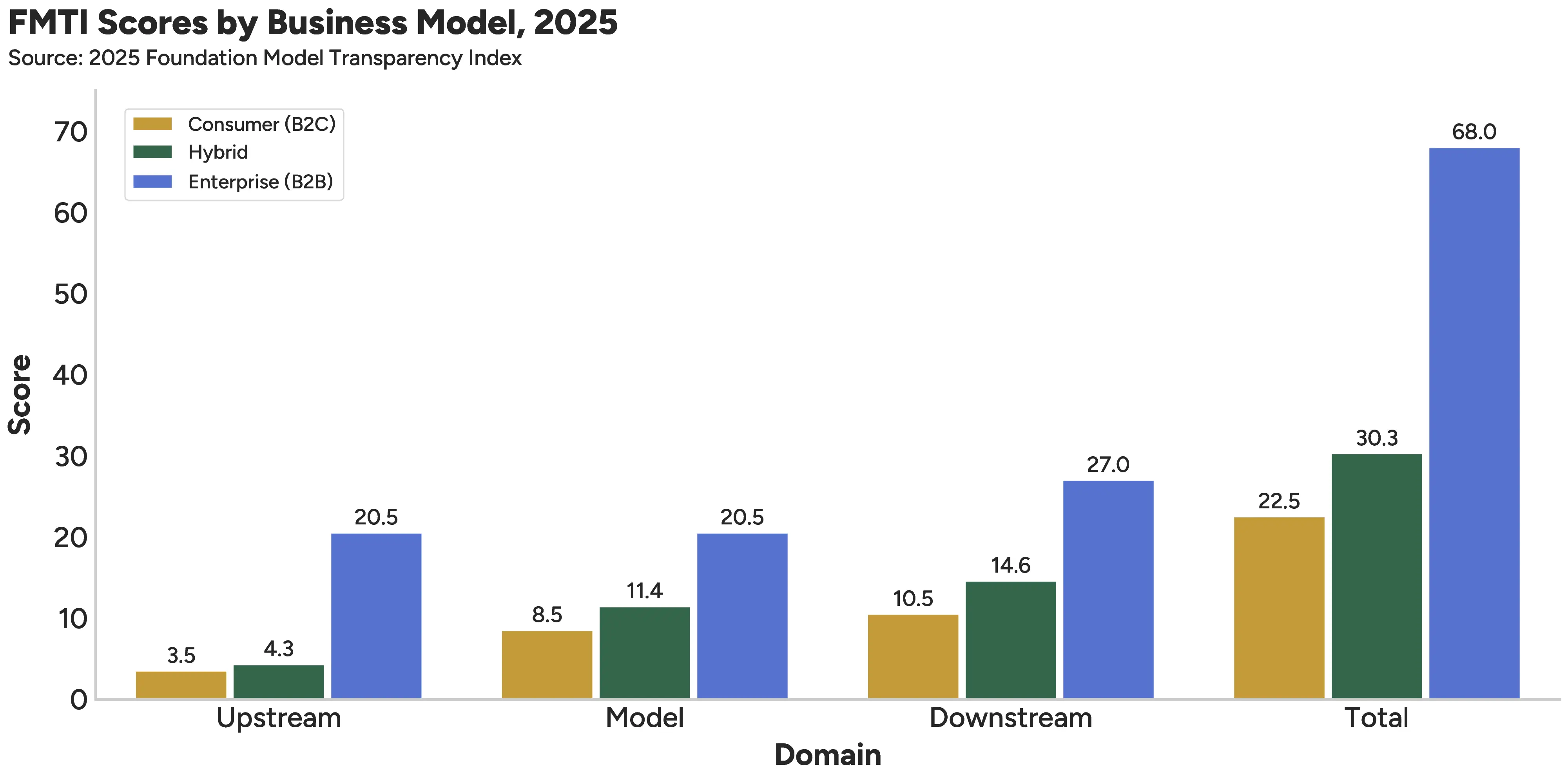

B2B companies are considerably more transparent. The gap is especially pronounced in the Upstream domain, where the 4 B2B companies receive, on average, around five times as many points as the 7 hybrid and 2 consumer developers.

The FMTI advisory board works directly with the Index team, advising on the design, execution, and presentation of subsequent iterations of the Index. Concretely, the Index team will meet regularly with the board to discuss key decision points: How is transparency best measured? How should companies disclose the relevant information publicly? How should scores be computed and presented? How should findings be communicated to companies, policymakers, and the public? The Index aims to measure transparency in order to bring about greater transparency in the foundation model ecosystem, and the board's collective wisdom will guide the Index team in achieving these goals.

The Foundation Model Transparency Index was created by a group of AI researchers from Stanford University's Center for Research on Foundation Models (CRFM) and Institute for Human-Centered Artificial Intelligence (HAI), the MIT Media Lab, and Princeton University's Center for Information Technology Policy. The group came together through a shared interest in improving the transparency of foundation models. See author websites below.