The Foundation Model Transparency Index (May 2024)

A comprehensive assessment of the transparency of foundation model developers



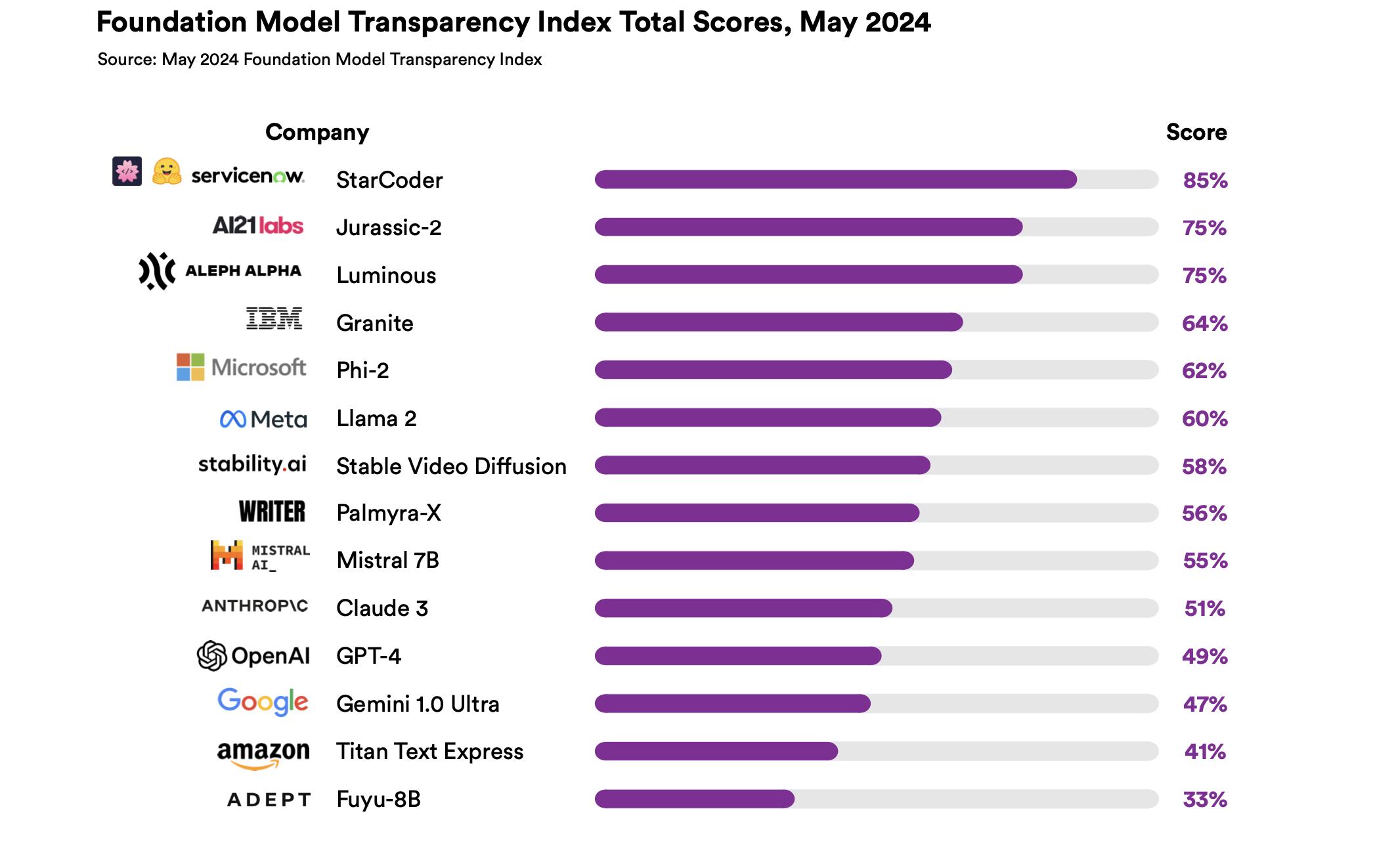

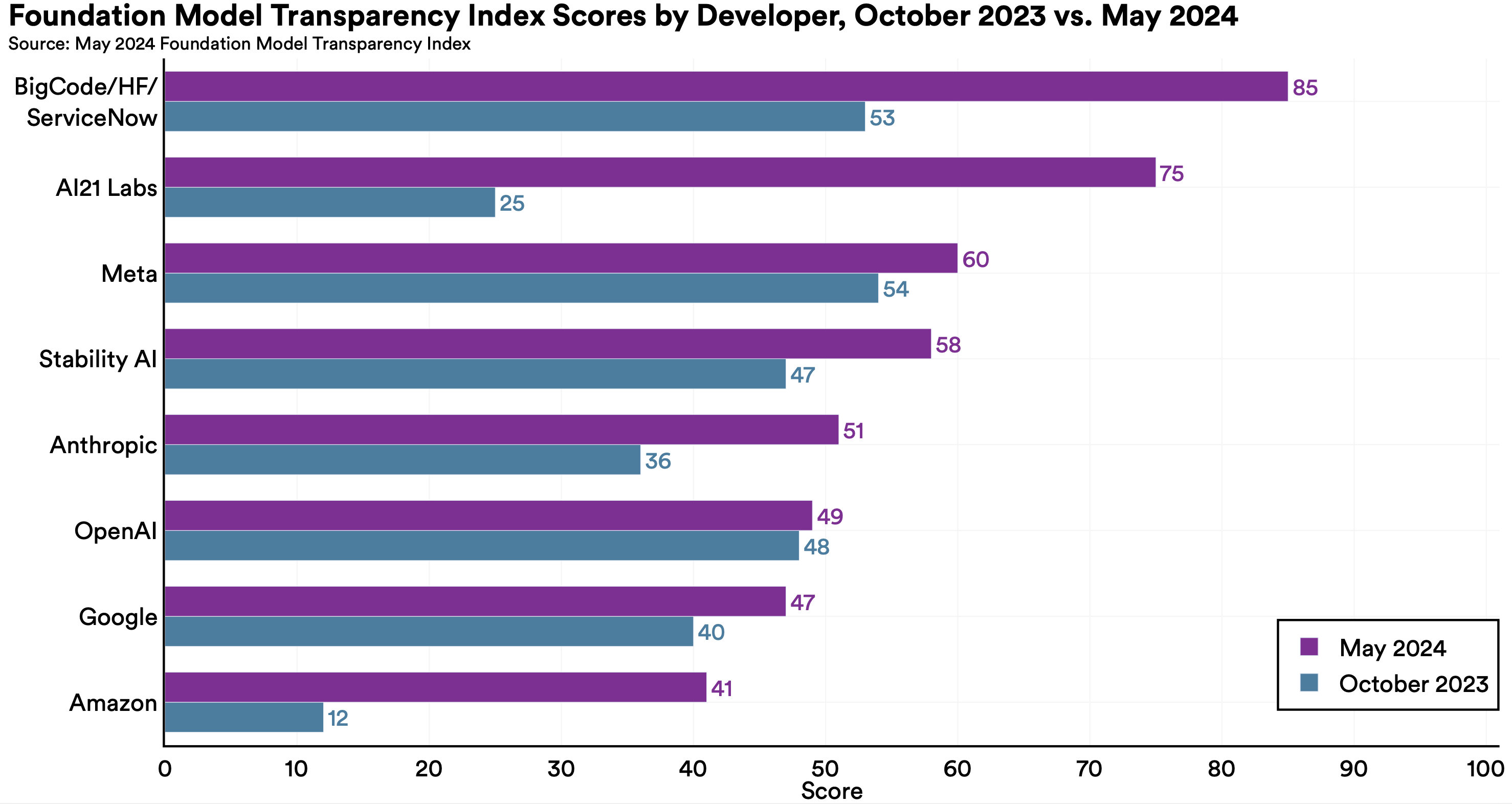

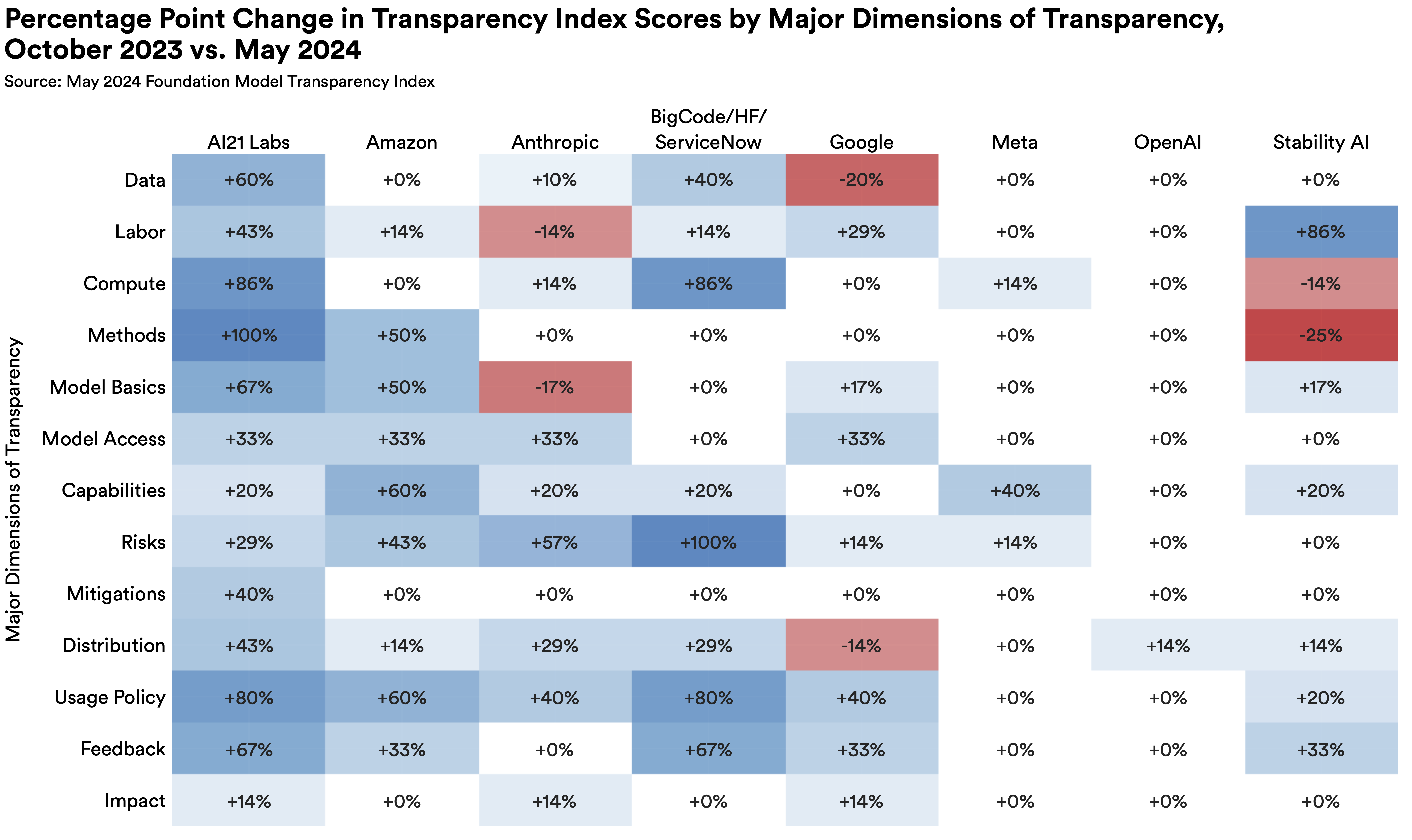

For the October 2023 Foundation Model Transparency Index, we searched the documentation of the 10 companies we examined to determine whether they disclosed information related to each of the 100 transparency indicators. The major change in our process for the May 2024 Index is that we asked companies to submit reports proactively disclosing information about these transparency indicators. We reached out to 19 companies, and 14 agreed to participate in our study.

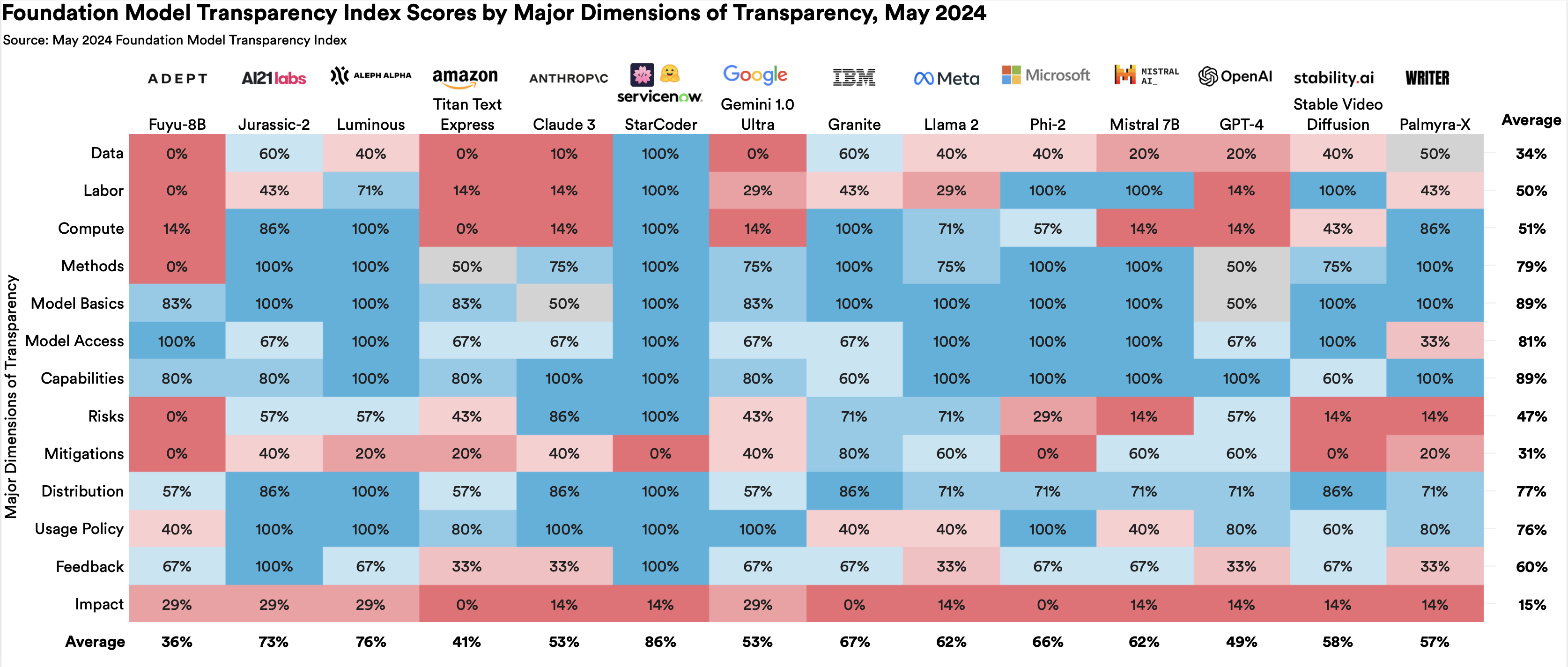

Disparity between most transparent and least transparent subdomains. The most transparent and least transparent subdomains are separated by 86 percentage points. In the figure below, we show the 13 major subdomains of transparency across the 14 developers we evaluate.
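To make a percentage-point gap between subdomains concrete, the following is a minimal Python sketch of one way to compute it. It assumes that each indicator is scored 1 (disclosed) or 0 (not disclosed), and that a subdomain's score is the average, across developers, of the share of that subdomain's indicators each developer satisfies. The developer names, subdomain names, and scores are hypothetical toy values, not FMTI data.

```python
# Hypothetical toy data: scores[developer][subdomain] lists binary indicator
# scores (1 = disclosed, 0 = not disclosed). Illustrative only, not FMTI data.
scores = {
    "dev_a": {"compute": [1, 1, 0, 1], "impact": [0, 0, 0, 1]},
    "dev_b": {"compute": [1, 0, 1, 1], "impact": [0, 0, 0, 0]},
}


def share(values: list[int]) -> float:
    """Fraction of a subdomain's indicators that one developer satisfies."""
    return sum(values) / len(values)


def subdomain_percentages(scores: dict[str, dict[str, list[int]]]) -> dict[str, float]:
    """Per-subdomain score in percent, averaged over developers (assumed aggregation)."""
    subdomains = next(iter(scores.values())).keys()
    return {
        sd: 100 * sum(share(dev[sd]) for dev in scores.values()) / len(scores)
        for sd in subdomains
    }


def transparency_gap(percentages: dict[str, float]) -> float:
    """Percentage-point gap between the most and least transparent subdomains."""
    return max(percentages.values()) - min(percentages.values())


pct = subdomain_percentages(scores)
print(pct)                    # {'compute': 75.0, 'impact': 12.5}
print(transparency_gap(pct))  # 62.5
```

Averaging per-developer shares (rather than pooling all indicator scores) gives each developer equal weight; with equal indicator counts per developer, as here, both approaches give the same result.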

Sustained opacity on specific issues. While overall trends indicate significant improvement in transparency relative to the status quo, some areas have seen no real headway. Information about data (copyright, licenses, and PII), the effectiveness of companies' guardrails (mitigation evaluations), and the downstream impact of foundation models (how people use models and how many people use them in specific regions) all remain quite opaque.

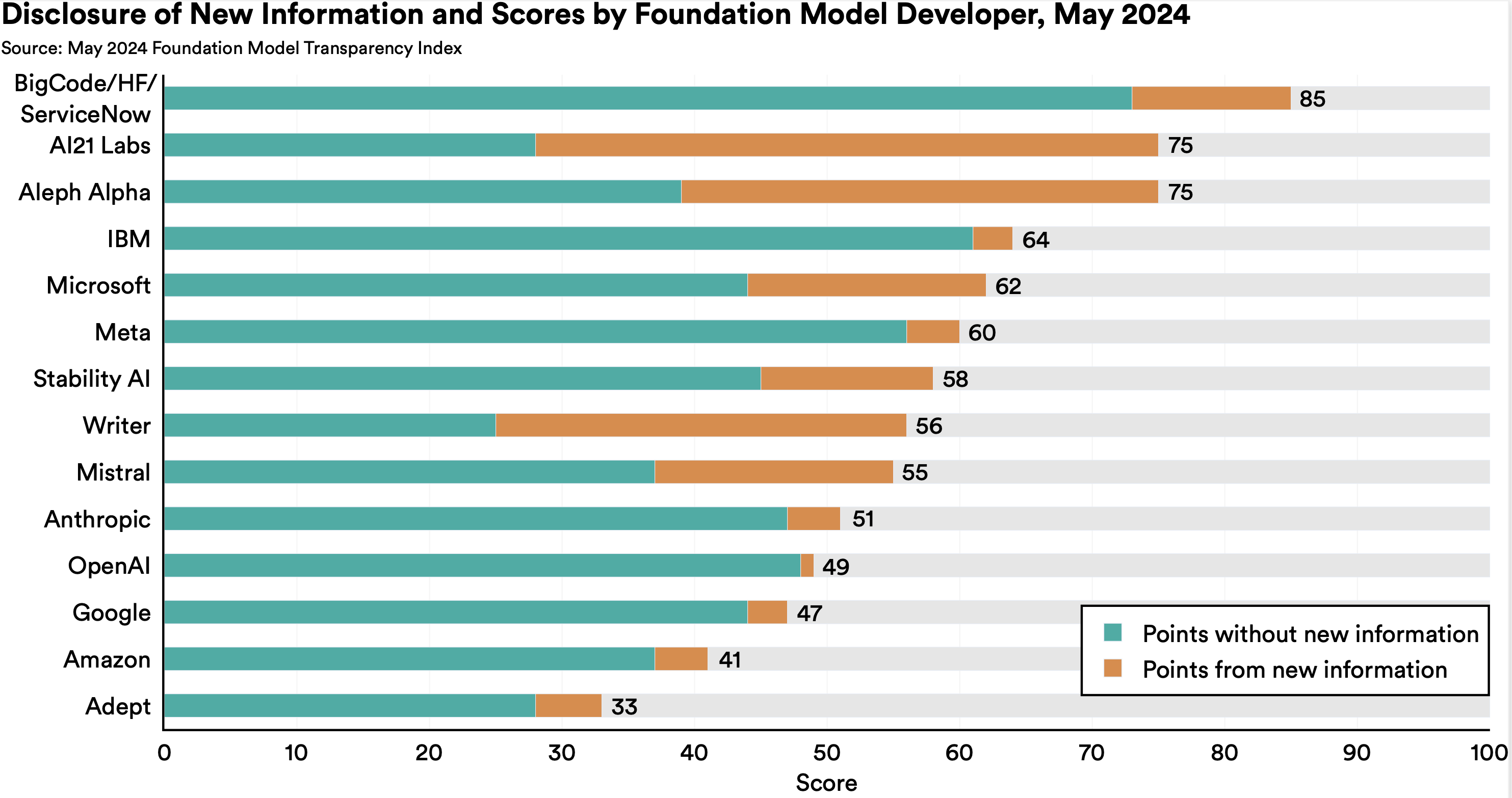

As part of the May 2024 version of FMTI, developers prepared reports including information related to the FMTI's 100 transparency indicators. We hope that these reports provide a model for how companies can regularly disclose important information about their foundation models.

In particular, developers' reports include a substantial amount of information that was not public before the beginning of the FMTI v1.1 process: on average, each company disclosed previously undisclosed information about 16.6 indicators. For example, four or more companies shared previously undisclosed information about the compute, energy, and synthetic data (if any) used to build their flagship foundation models. These transparency reports provide a wealth of information that other researchers can analyze to learn more about the AI industry.
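A per-company count of newly disclosed indicators can be sketched as a set difference: the indicators satisfied after a company's report, minus those already satisfied by information public beforehand, averaged across companies. This is a minimal sketch under that assumption. The company names and indicator IDs are hypothetical, and the sketch does not model the human judgment involved in deciding whether an indicator is satisfied.

```python
# Hypothetical toy data: for each company, (indicators satisfied by public
# information before the process, indicators satisfied after its report).
# Names and indicator IDs are illustrative only, not FMTI data.
companies = {
    "dev_a": ({"i1", "i2"}, {"i1", "i2", "i3", "i4"}),
    "dev_b": ({"i1"}, {"i1", "i5"}),
}


def newly_disclosed(before: set[str], after: set[str]) -> set[str]:
    """Indicators satisfied after the report that were not satisfied before."""
    return after - before


def average_new_disclosures(companies: dict[str, tuple[set[str], set[str]]]) -> float:
    """Mean number of newly disclosed indicators per company."""
    counts = [len(newly_disclosed(before, after)) for before, after in companies.values()]
    return sum(counts) / len(counts)


print(average_new_disclosures(companies))  # 1.5
```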

The FMTI advisory board will work directly with the Index team, advising on the design, execution, and presentation of subsequent iterations of the Index. Concretely, the Index team will meet regularly with the board to discuss key decision points: How is transparency best measured? How should companies disclose the relevant information publicly? How should scores be computed and presented? How should findings be communicated to companies, policymakers, and the public? The Index aims to measure transparency in order to bring about greater transparency in the foundation model ecosystem, and the board's collective wisdom will guide the Index team in achieving these goals.

The May 2024 Foundation Model Transparency Index was created by a group of eight AI researchers from Stanford University's Center for Research on Foundation Models (CRFM) and Institute for Human-Centered Artificial Intelligence (HAI), the MIT Media Lab, and Princeton University's Center for Information Technology Policy. The shared interest that brought the group together is improving the transparency of foundation models. See author websites below.